Archive for the ‘OpenGL’ Category

Homage to Structure Synth

This post is a follow-up to something I wrote exactly one year ago: Mesh Generation with Python.

In the old post, I discussed a number of ways to generate 3D shapes in Python, and I posted some OpenGL screenshots to show off the geometry.

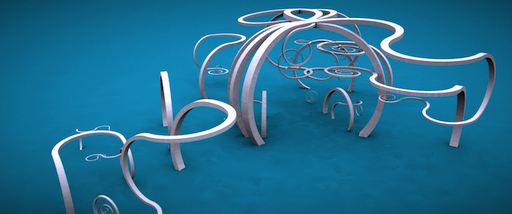

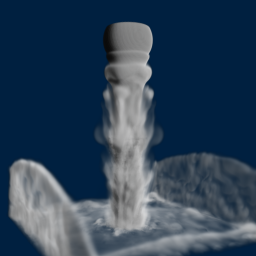

Since this is my first post since joining Pixar, I figured it would be fun to use RenderMan rather than OpenGL to show off some new procedurally-generated shapes. Sure, my new images don’t render at interactive rates, but they’re nice and high-quality:

Beautiful Lindenmayer Systems

My favorite L-system from Mikael Hvidtfeldt Christensen is his Nouveau Variation. When I first saw it, I stared at it for half an hour; it’s hypnotically mathematical and surreal. He generates his art using his own software, Structure Synth.

To make similar creations without the help of Mikael’s software, I use the same XML format and Python script that I showed off in my 2010 post (here), with an extension to switch from one rule to another when a particular rule’s maximum depth is reached.

The other improvement I made was in the implementation itself; rather than using recursion to evaluate the L-system rules, I use a Python list as a stack. This turned out to simplify the code.
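Here is a minimal sketch of the stack-based evaluation, including the switch to a successor rule at maximum depth. It is not the actual script: the `RULES` table, the `evaluate` function, and the use of a running x-offset in place of a full transform matrix are all hypothetical simplifications, and the random choice among same-named rules is left out.

```python
# Hypothetical, simplified rule table. Each call is (target, dx), where dx
# stands in for a full transform. A rule may declare its own max_depth and a
# successor rule to switch to once that depth is reached.
RULES = {
    "entry":   {"calls": [("forward", 0.0)]},
    "forward": {"calls": [("box", 0.0), ("forward", 0.1)],
                "max_depth": 3, "successor": "turn"},
    "turn":    {"calls": [("box", 0.0), ("turn", -0.1)],
                "max_depth": 2},
}

def evaluate(rules, entry="entry", global_max_depth=1000):
    """Expand the rules with an explicit stack; return the x-offset of every box."""
    shapes = []
    stack = [(entry, 0, 0.0)]  # (rule name, depth within that rule, offset)
    while stack:
        name, depth, x = stack.pop()
        if name == "box":  # terminal: emit an instance
            shapes.append(round(x, 6))
            continue
        rule = rules[name]
        limit = rule.get("max_depth", global_max_depth)
        if depth >= limit:
            successor = rule.get("successor")
            if successor:  # switch rules instead of stopping
                stack.append((successor, 0, x))
            continue
        # Push in reverse so the calls are processed in their written order.
        for target, dx in reversed(rule["calls"]):
            next_depth = depth + 1 if target == name else 0
            stack.append((target, next_depth, x + dx))
    return shapes
```

With the table above, `evaluate(RULES)` emits three boxes marching forward, then two more after the switch to `turn`.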

Here’s the XML representation of Mikael’s beautiful “Nouveau” L-system, which I used to generate all the images on this page:

<rules max_depth="1000">

<rule name="entry">

<call count="16" transforms="rz 20" rule="hbox"/>

</rule>

<rule name="hbox"><call rule="r"/></rule>

<rule name="r"><call rule="forward"/></rule>

<rule name="r"><call rule="turn"/></rule>

<rule name="r"><call rule="turn2"/></rule>

<rule name="r"><call rule="turn4"/></rule>

<rule name="r"><call rule="turn3"/></rule>

<rule name="forward" max_depth="90" successor="r">

<call rule="dbox"/>

<call transforms="rz 2 tx 0.1 sa 0.996" rule="forward"/>

</rule>

<rule name="turn" max_depth="90" successor="r">

<call rule="dbox"/>

<call transforms="rz 2 tx 0.1 sa 0.996" rule="turn"/>

</rule>

<rule name="turn2" max_depth="90" successor="r">

<call rule="dbox"/>

<call transforms="rz -2 tx 0.1 sa 0.996" rule="turn2"/>

</rule>

<rule name="turn3" max_depth="90" successor="r">

<call rule="dbox"/>

<call transforms="ry -2 tx 0.1 sa 0.996" rule="turn3"/>

</rule>

<rule name="turn4" max_depth="90" successor="r">

<call rule="dbox"/>

<call transforms="ry -2 tx 0.1 sa 0.996" rule="turn4"/>

</rule>

<rule name="turn5" max_depth="90" successor="r">

<call rule="dbox"/>

<call transforms="rx -2 tx 0.1 sa 0.996" rule="turn5"/>

</rule>

<rule name="turn6" max_depth="90" successor="r">

<call rule="dbox"/>

<call transforms="rx -2 tx 0.1 sa 0.996" rule="turn6"/>

</rule>

<rule name="dbox">

<instance transforms="s 2.0 2.0 1.25" shape="boxy"/>

</rule>

</rules>

Python Snippets for RenderMan

RenderMan lets you assign names to gprims, which I find useful when it reports errors. RenderMan also lets you assign gprims to various “ray groups”, which is nice when you’re using AO or Global Illumination; it lets you include/exclude certain geometry from ray-casts.

Given these two labels (an identifier and a ray group), I found it useful to extend RenderMan’s Python binding with a utility function:

    def SetLabel(self, label, groups=''):
        """Sets the id and ray group(s) for subsequent gprims"""
        self.Attribute(self.IDENTIFIER, {self.NAME: label})
        if groups != '':
            self.Attribute("grouping", {"membership": groups})

Since Python lets you dynamically add methods to a class, you can graft the above function onto the Ri class like so:

prman.Ri.SetLabel = SetLabel

ri = prman.Ri()

# do some init stuff....

ri.SetLabel("MyHeroShape", "MovingGroup")

# instance a gprim...

I also found it useful to write some Python functions that simply called out to external processes, like the RSL compiler and the brickmap utility:

    import os
    import sys

    def CompileShader(shader):
        """Compiles the given RSL file"""
        print('Compiling %s...' % shader)
        retval = os.system("shader %s.sl" % shader)
        if retval:
            # Stop the script if the shader compiler reported an error
            sys.exit(1)

    def CreateBrickmap(base):
        """Creates a brick map from a point cloud"""
        if os.path.exists('%s.bkm' % base):
            print("Found brickmap for %s" % base)
        else:
            print("Creating brickmap for %s..." % base)
            if not os.path.exists('%s.ptc' % base):
                print("Error: %s.ptc has not been generated." % base)
            else:
                os.system("brickmake %s.ptc %s.bkm" % (base, base))

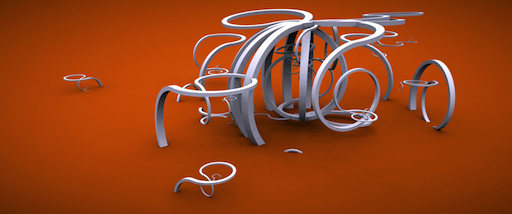

Here’s another image of the same L-system, using a different random seed:

Reminds me of Salvador Dalí…

Some RSL Fun

The RenderMan stuff was fairly straightforward. I’m using a bog-standard AO shader:

class ComputeOcclusion(string hitgroup = "";

color em = (1,0,1);

float samples = 64)

{

public void surface(output color Ci, Oi)

{

normal Nn = normalize(N);

float occ = occlusion(P, Nn, samples,

"maxdist", 100.0,

"subset", hitgroup);

Ci = (1 - occ) * Cs * Os;

Ci *= em;

Oi = Os;

}

}

Hurray, that was my first RSL code snippet on the blog! I’m also using an imager shader to achieve the same vignette effect that Mikael used for his artwork. It just applies a radial dimming operation and adds a bit of noise to give it a photographic look:

class Vignette()

{

public void imager(output varying color Ci; output varying color Oi)

{

float x = s - 0.5;

float y = t - 0.5;

float d = x * x + y * y;

Ci *= 1.0 - d;

float n = (cellnoise(s*1000, t*1000) - 0.5);

Ci += n * 0.025;

}

}

Downloads

Here are my Python scripts and RSL code for your enjoyment:

Gallery

Deep Opacity Maps

In the video, the light source is slowly spinning around the smoke stack, showing that a deep opacity map is generated at every frame. For now it’s being computed rather inefficiently, by performing a full raycast over each voxel in the light map. Here’s my fragment shader for generating the opacity map:

in float gLayer;

out float FragColor;

uniform sampler3D Density;

uniform vec3 LightPosition = vec3(1.0, 1.0, 2.0);

uniform float LightIntensity = 10.0;

uniform float Absorption = 10.0;

uniform float LightStep;

uniform int LightSamples;

uniform vec3 InverseSize;

void main()

{

vec3 pos = InverseSize * vec3(gl_FragCoord.xy, gLayer);

vec3 lightDir = normalize(LightPosition-pos) * LightStep;

float Tl = 1.0;

vec3 lpos = pos + lightDir;

for (int s = 0; s < LightSamples; ++s) {

float ld = texture(Density, lpos).x;

Tl *= 1.0 - Absorption * LightStep * ld;

if (Tl <= 0.01)

break;

// Would be faster if this conditional is replaced with a tighter loop

if (lpos.x < 0 || lpos.y < 0 || lpos.z < 0 ||

lpos.x > 1 || lpos.y > 1 || lpos.z > 1)

break;

lpos += lightDir;

}

float Li = LightIntensity*Tl;

FragColor = Li;

}

It would be much more efficient to align the light volume with the light direction, allowing you to remove the loop and accumulate results from the previous layer. Normally you can’t sample from the same texture that you’re rendering to, but in this case it would be a different layer of the FBO, so it would be legal.
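To illustrate the idea of accumulating from the previous layer, here is a minimal CPU-side sketch in Python. It assumes the light travels along the layer axis and looks at a single column of voxels; `accumulate_transmittance` and its parameters are hypothetical names that mirror the `Absorption` and `LightStep` uniforms in the shader.

```python
def accumulate_transmittance(densities, absorption=10.0, light_step=0.01):
    """Return the light transmittance reaching each layer, front to back.

    Layer k reuses the result for layer k-1 instead of re-marching the ray.
    """
    transmittance = []
    t = 1.0  # nothing has absorbed light before the first layer
    for density in densities:
        transmittance.append(t)
        # Same attenuation step as the shader's loop body, clamped at zero.
        t = max(0.0, t * (1.0 - absorption * light_step * density))
    return transmittance
```

Each layer costs one multiply rather than a full march toward the light, which is where the savings would come from.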

Another potential optimization is using MRT with 8 render targets, and packing 4 depths into each layer; this would generate 32 layers per instance rather than just one!

Here’s another video just for fun, taken with a higher resolution grid. It doesn’t run at interactive rates (I forced the video to 30 fps) but the crepuscular shadow rays sure look cool:

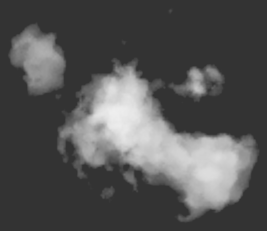

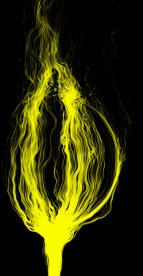

Noise-Based Particles, Part II

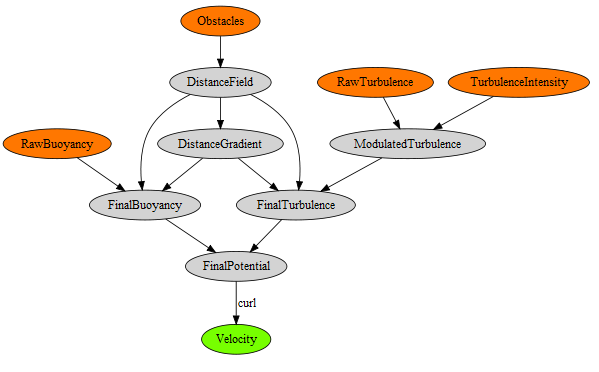

In Part I, I covered Bridson’s “curl noise” method for particle advection, describing how to build a static grid of velocity vectors. I portrayed the construction process as an acyclic image processing graph, where the inputs are volumetric representations of obstacles and turbulence.

The demo code in Part I was a bit lame, since it moved particles on the CPU. In this post, I show how to perform advection on the GPU using GL_TRANSFORM_FEEDBACK. For more complex particle management, I’d probably opt for OpenCL/CUDA, but for something this simple, transform feedback is the easiest route to take.

Initialization

In my particle simulation, the number of particles remains fairly constant, so I decided to keep it simple by ping-ponging two statically sized VBOs. The beauty of transform feedback is that the two VBOs can stay in dedicated graphics memory; no bus travel.

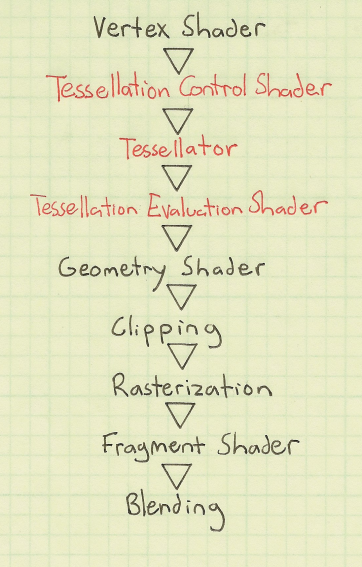

In the days before transform feedback (and CUDA), the only way to achieve GPU-based advection was sneaky usage of the fragment shader and a one-dimensional FBO. Those days are long gone — OpenGL now allows you to effectively shut off the rasterizer, performing advection completely in the vertex shader and/or geometry shader.

The first step is creating the two ping-pong VBOs, which is done like you’d expect:

GLuint ParticleBufferA, ParticleBufferB;

const int ParticleCount = 100000;

ParticlePod seed_particles[ParticleCount] = { ... };

// Create VBO for input on even-numbered frames and output on odd-numbered frames:

glGenBuffers(1, &ParticleBufferA);

glBindBuffer(GL_ARRAY_BUFFER, ParticleBufferA);

glBufferData(GL_ARRAY_BUFFER, sizeof(seed_particles), &seed_particles[0], GL_STREAM_DRAW);

glBindBuffer(GL_ARRAY_BUFFER, 0);

// Create VBO for output on even-numbered frames and input on odd-numbered frames:

glGenBuffers(1, &ParticleBufferB);

glBindBuffer(GL_ARRAY_BUFFER, ParticleBufferB);

glBufferData(GL_ARRAY_BUFFER, sizeof(seed_particles), 0, GL_STREAM_DRAW);

glBindBuffer(GL_ARRAY_BUFFER, 0);

Note that I provided some initial seed data in ParticleBufferA, but I left ParticleBufferB uninitialized. This initial seeding is the only CPU-GPU transfer in the demo.

By the way, I don’t think the GL_STREAM_DRAW hint really matters; most drivers are smart enough to manage memory in a way that they think is best.

The only other initialization task is binding the outputs from the vertex shader (or geometry shader). Watch out because this needs to take place after you compile the shaders, but before you link them:

GLuint programHandle = glCreateProgram();

GLuint vsHandle = glCreateShader(GL_VERTEX_SHADER);

glShaderSource(vsHandle, 1, &vsSource, 0);

glCompileShader(vsHandle);

glAttachShader(programHandle, vsHandle);

const char* varyings[3] = { "vPosition", "vBirthTime", "vVelocity" };

glTransformFeedbackVaryings(programHandle, 3, varyings, GL_INTERLEAVED_ATTRIBS);

glLinkProgram(programHandle);

Yep, that’s an OpenGL program object that only has a vertex shader attached; no fragment shader!

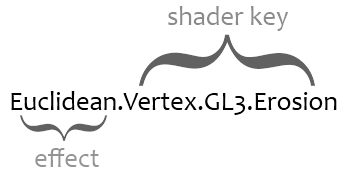

I realize it smells suspiciously like Hungarian, but I like to prefix my vertex shader outputs with a lowercase “v”, geometry shader outputs with lowercase “g”, etc. It helps me avoid naming collisions when trickling a value through the entire pipe.

Advection Shader

The vertex shader for noise-based advection is crazy simple. I stole the randhash function from a Robert Bridson demo; it was surprisingly easy to port to GLSL.

-- Vertex Shader

in vec3 Position;

in float BirthTime;

in vec3 Velocity;

out vec3 vPosition;

out float vBirthTime;

out vec3 vVelocity;

uniform sampler3D Sampler;

uniform vec3 Size;

uniform vec3 Extent;

uniform float Time;

uniform float TimeStep = 5.0;

uniform float InitialBand = 0.1;

uniform float SeedRadius = 0.25;

uniform float PlumeCeiling = 3.0;

uniform float PlumeBase = -3;

const float TwoPi = 6.28318530718;

const float InverseMaxInt = 1.0 / 4294967295.0;

float randhash(uint seed, float b)

{

uint i=(seed^12345391u)*2654435769u;

i^=(i<<6u)^(i>>26u);

i*=2654435769u;

i+=(i<<5u)^(i>>12u);

return float(b * i) * InverseMaxInt;

}

vec3 SampleVelocity(vec3 p)

{

vec3 tc;

tc.x = (p.x + Extent.x) / (2 * Extent.x);

tc.y = (p.y + Extent.y) / (2 * Extent.y);

tc.z = (p.z + Extent.z) / (2 * Extent.z);

return texture(Sampler, tc).xyz;

}

void main()

{

vPosition = Position;

vBirthTime = BirthTime;

// Seed a new particle as soon as an old one dies:

if (BirthTime == 0.0 || Position.y > PlumeCeiling) {

uint seed = uint(Time * 1000.0) + uint(gl_VertexID);

float theta = randhash(seed++, TwoPi);

float r = randhash(seed++, SeedRadius);

float y = randhash(seed++, InitialBand);

vPosition.x = r * cos(theta);

vPosition.y = PlumeBase + y;

vPosition.z = r * sin(theta);

vBirthTime = Time;

}

// Advance the particle using an additional half-step to reduce numerical issues:

vVelocity = SampleVelocity(Position);

vec3 midx = Position + 0.5f * TimeStep * vVelocity;

vVelocity = SampleVelocity(midx);

vPosition += TimeStep * vVelocity;

}
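The half-step at the end of the shader is the midpoint (second-order Runge-Kutta) method. Here is a small Python sketch of why it helps, using an analytic rotational field in place of the 3D velocity texture. The names `sample_velocity`, `advect_euler`, `advect_midpoint`, and `radius_after` are illustrative and not part of the demo.

```python
import math

def sample_velocity(p):
    """Stand-in for the velocity texture: rigid rotation about the origin."""
    x, y = p
    return (-y, x)

def advect_euler(p, dt):
    """Plain forward-Euler step, for comparison."""
    v = sample_velocity(p)
    return (p[0] + dt * v[0], p[1] + dt * v[1])

def advect_midpoint(p, dt):
    """Sample velocity at the half-step position, then take the full step."""
    v = sample_velocity(p)
    mid = (p[0] + 0.5 * dt * v[0], p[1] + 0.5 * dt * v[1])
    v = sample_velocity(mid)
    return (p[0] + dt * v[0], p[1] + dt * v[1])

def radius_after(step, steps=100, dt=0.1):
    """Distance from the origin after repeatedly stepping from (1, 0)."""
    p = (1.0, 0.0)
    for _ in range(steps):
        p = step(p, dt)
    return math.hypot(p[0], p[1])
```

A particle in this field should orbit at radius 1. With forward Euler it spirals outward noticeably, while the midpoint version stays very close to the circle.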

Note the sneaky usage of gl_VertexID to help randomize the seed. Cool eh?
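If you want to reproduce the seeding on the CPU (for debugging, say), the hash ports to Python once the 32-bit wraparound is made explicit. This is a sketch of a port rather than Bridson's original code; the `MASK32` constant and the split into `randhash` and `randhashf` are my own choices here.

```python
MASK32 = 0xFFFFFFFF

def randhash(seed):
    """32-bit integer hash, mirroring the uint arithmetic of the GLSL version."""
    i = ((seed ^ 12345391) * 2654435769) & MASK32
    i ^= ((i << 6) & MASK32) ^ (i >> 26)
    i = (i * 2654435769) & MASK32
    i = (i + (((i << 5) & MASK32) ^ (i >> 12))) & MASK32
    return i

def randhashf(seed, b):
    """Map the hash of seed to a float in the range [0, b]."""
    return b * randhash(seed & MASK32) / float(MASK32)
```

Python integers never overflow, so every shift, multiply, and add that could exceed 32 bits is masked to imitate `uint` behavior.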

Using Transform Feedback

Now let’s see how to apply the above shader from your application code. You’ll need to use three functions that you might not be familiar with: glBindBufferBase specifies the target VBO, and glBeginTransformFeedback / glEndTransformFeedback delimit the draw call that performs advection. These calls appear in the listing below, along with the new enable (GL_RASTERIZER_DISCARD) that allows you to turn off rasterization:

    // Set up the advection shader:
    glUseProgram(ParticleAdvectProgram);
    glUniform1f(timeLoc, currentTime);

    // Specify the source buffer. The stride must match the interleaved
    // varyings captured by transform feedback, so ParticlePod needs to hold
    // position, birth time, and velocity:
    glEnable(GL_RASTERIZER_DISCARD);
    glBindBuffer(GL_ARRAY_BUFFER, ParticleBufferA);
    glEnableVertexAttribArray(SlotPosition);
    glEnableVertexAttribArray(SlotBirthTime);
    char* pOffset = 0;
    glVertexAttribPointer(SlotPosition, 3, GL_FLOAT, GL_FALSE, sizeof(ParticlePod), pOffset);
    glVertexAttribPointer(SlotBirthTime, 1, GL_FLOAT, GL_FALSE, sizeof(ParticlePod), 12 + pOffset);

    // Specify the target buffer:
    glBindBufferBase(GL_TRANSFORM_FEEDBACK_BUFFER, 0, ParticleBufferB);

    // Perform GPU advection:
    glBeginTransformFeedback(GL_POINTS);
    glBindTexture(GL_TEXTURE_3D, VelocityTexture.Handle);
    glDrawArrays(GL_POINTS, 0, ParticleCount);
    glEndTransformFeedback();

    // Swap the A and B buffers for ping-ponging, then turn the rasterizer back on:
    std::swap(ParticleBufferA, ParticleBufferB);
    glDisable(GL_RASTERIZER_DISCARD);

The last step is actually rendering the post-transformed particles:

    glUseProgram(ParticleRenderProgram);
    glBindBuffer(GL_ARRAY_BUFFER, ParticleBufferA);
    glVertexAttribPointer(SlotPosition, 3, GL_FLOAT, GL_FALSE, sizeof(ParticlePod), pOffset);
    glVertexAttribPointer(SlotBirthTime, 1, GL_FLOAT, GL_FALSE, sizeof(ParticlePod), 12 + pOffset);
    glDrawArrays(GL_POINTS, 0, ParticleCount);

In my case, rendering the particles was definitely the bottleneck; the advection was insanely fast. As covered in Part I, I use the geometry shader to extrude points into view-aligned billboards that get stretched according to the velocity vector. An interesting extension to this approach would be to keep a short history (3 or 4 positions) with each particle, allowing nice particle trails, also known as “particle traces”. This brings back memories of the ASCII snake games of my youth (does anyone remember QBasic Nibbles?)

Well, that’s about it! Please realize that I’ve only covered the simplest possible usage of transform feedback. OpenGL 4.0 introduced much richer functionality, allowing you to intermingle several VBOs in any way you like and to execute draw calls without any knowledge of buffer size. If you want to learn more, check out this nice write-up from Christophe Riccio, where he describes the evolution of transform feedback:

http://www.g-truc.net/post-0269.html

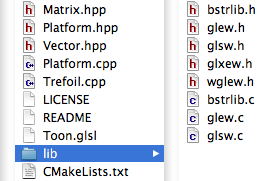

Downloads

The first time you run my demo, it’ll take a while to initialize because it needs to construct the velocity field. On subsequent runs, it loads the data from an external file, so it starts up quickly. Note that I’m using the CPU to generate the velocity field; performing it on the GPU would be much, much faster.

I’ve tested the code with Visual Studio 2010. It uses CMake for the build system.

The code is released under the Unlicense.

3D Eulerian Grid

I finally extended my OpenGL-based Eulerian fluid simulation to 3D. It’s not as pretty as Miles Macklin’s stuff (here), but at least it runs at interactive rates on my GeForce GTS 450, which isn’t exactly top-of-the-line. My original blog entry, “Simple Fluid Simulation”, goes into much more detail than this post.

I thought it would be fun to invert buoyancy and gravity to make it look more like liquid nitrogen than smoke. The source code can be downloaded at the end of this post.

Extending to three dimensions was fairly straightforward. I’m still using a half-float format for my velocity texture, but I’ve obviously made it 3D and changed its format to GL_RGB16F (previously it was GL_RG16F).

The volumetric image processing is achieved by ping-ponging layered FBOs, using instanced rendering to update all slices of a volume with a single draw call and a tiny 4-vert VBO, like this:

glDrawArraysInstanced(GL_TRIANGLE_STRIP, 0, 4, numLayers);

Here are the vertex and geometry shaders that I use to make this work:

-- Vertex Shader

in vec4 Position;

out int vInstance;

void main()

{

gl_Position = Position;

vInstance = gl_InstanceID;

}

-- Geometry Shader

layout(triangles) in;

layout(triangle_strip, max_vertices = 3) out;

in int vInstance[3];

out float gLayer;

uniform float InverseSize;

void main()

{

gl_Layer = vInstance[0];

gLayer = float(gl_Layer) + 0.5;

gl_Position = gl_in[0].gl_Position;

EmitVertex();

gl_Position = gl_in[1].gl_Position;

EmitVertex();

gl_Position = gl_in[2].gl_Position;

EmitVertex();

EndPrimitive();

}

Note that I offset the layer by 0.5. I’m too lazy to pass the entire texture coordinate to the fragment shader, so my fragment shader uses a gl_FragCoord trick to get to the right texture coordinate:

-- Fragment Shader

in float gLayer;

uniform vec3 InverseSize;

uniform sampler3D VelocityTexture;

void main()

{

vec3 tc = InverseSize * vec3(gl_FragCoord.xy, gLayer);

vec3 velocity = texture(VelocityTexture, tc).xyz;

// ...

}

Since the fragment shader gets evaluated at pixel centers, I needed the 0.5 offset on the layer coordinate to prevent the fluid from “drifting” along the Z axis.
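A quick numeric illustration of that offset, as a Python sketch (`layer_texcoord` is a hypothetical helper, not something from the demo): the normalized Z coordinate of slice k in a texture with N slices is (k + 0.5) / N, which lands on the slice center rather than on the boundary between two slices.

```python
def layer_texcoord(layer, depth):
    """Normalized Z coordinate of the center of slice `layer` in a 3D texture."""
    return (layer + 0.5) / depth
```

For a 4-slice volume this gives 0.125, 0.375, 0.625, and 0.875. Without the 0.5, slice 1 would sample at 0.25, exactly on the border between slices 0 and 1, so linear filtering would blend the two and the fluid would creep along Z a little every frame.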

The fragment shaders are otherwise pretty damn similar to their 2D counterparts. As an example, I’ll show you how I extended the SubtractGradient shader, which computes the gradient vector for pressure. Previously it did something like this:

    float N = texelFetchOffset(Pressure, T, 0, ivec2(0, 1)).r;
    float S = texelFetchOffset(Pressure, T, 0, ivec2(0, -1)).r;
    float E = texelFetchOffset(Pressure, T, 0, ivec2(1, 0)).r;
    float W = texelFetchOffset(Pressure, T, 0, ivec2(-1, 0)).r;
    vec2 oldVelocity = texelFetch(Velocity, T, 0).xy;
    vec2 gradient = vec2(E - W, N - S) * GradientScale;
    vec2 newVelocity = oldVelocity - gradient;

The new code does something like this:

    float N = texelFetchOffset(Pressure, T, 0, ivec3(0, 1, 0)).r;
    float S = texelFetchOffset(Pressure, T, 0, ivec3(0, -1, 0)).r;
    float E = texelFetchOffset(Pressure, T, 0, ivec3(1, 0, 0)).r;
    float W = texelFetchOffset(Pressure, T, 0, ivec3(-1, 0, 0)).r;
    float U = texelFetchOffset(Pressure, T, 0, ivec3(0, 0, 1)).r;
    float D = texelFetchOffset(Pressure, T, 0, ivec3(0, 0, -1)).r;
    vec3 oldVelocity = texelFetch(Velocity, T, 0).xyz;
    vec3 gradient = vec3(E - W, N - S, U - D) * GradientScale;
    vec3 newVelocity = oldVelocity - gradient;

Simple!
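If you want to sanity-check the 3D version on the CPU, the same central-difference update can be sketched in Python. This is an illustration only: `subtract_gradient` is a hypothetical function, the grid is a nested list indexed as `pressure[z][y][x]`, and the default `gradient_scale` of 0.5 assumes unit-sized cells.

```python
def subtract_gradient(pressure, velocity, x, y, z, gradient_scale=0.5):
    """Return velocity minus the central-difference pressure gradient.

    The cell (x, y, z) must not lie on the boundary of the grid.
    """
    e = pressure[z][y][x + 1]
    w = pressure[z][y][x - 1]
    n = pressure[z][y + 1][x]
    s = pressure[z][y - 1][x]
    u = pressure[z + 1][y][x]
    d = pressure[z - 1][y][x]
    gradient = ((e - w) * gradient_scale,
                (n - s) * gradient_scale,
                (u - d) * gradient_scale)
    return tuple(v - g for v, g in zip(velocity, gradient))
```

For a linear pressure field such as p = x + 2y + 3z, the computed gradient is exactly (1, 2, 3), which makes a handy test case.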

Downloads

I’ve tested the code with Visual Studio 2010. It uses CMake for the build system. If you have trouble building it or running it, let me warn you that you need a decent graphics card and decent OpenGL drivers.

I consider this code to be in the public domain. Enjoy!

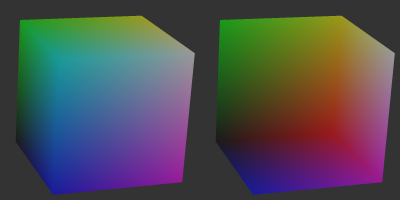

Single-Pass Raycasting

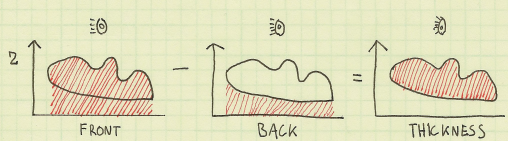

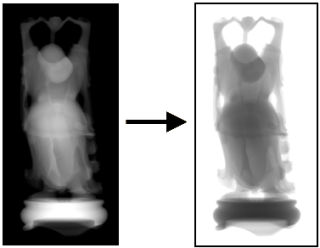

Raycasting over a volume requires start and stop points for your rays. The traditional method for computing these intervals is to draw a cube (with perspective) into two surfaces: one surface has front faces, the other has back faces. By using a fragment shader that writes object-space XYZ into the RGB channels, you get intervals. Your final pass is the actual raycast.

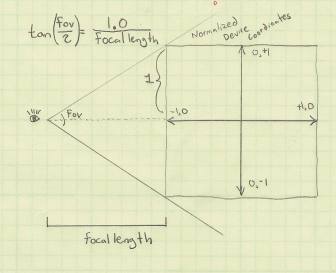

In the original incarnation of this post, I proposed making it into a single pass process by dilating a back-facing triangle from the cube and performing perspective-correct interpolation math in the fragment shader. Simon Green pointed out that this was a bit silly, since I can simply do a ray-cube intersection. So I rewrote this post, showing how to correlate a field-of-view angle (typically used to generate an OpenGL projection matrix) and focal length (typically used to determine ray direction). This might be useful to you if you need to integrate a raycast volume into an existing 3D scene that uses traditional rendering.

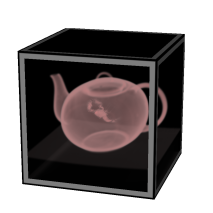

To have something interesting to render in the demo code (download is at the end of the post), I generated a pyroclastic cloud as described in this amazing PDF on volumetric methods from the 2010 SIGGRAPH course. Miles Macklin has a simply great blog entry about it here.

Recap of Two-Pass Raycasting

Here’s a depiction of the usual offscreen surfaces for ray intervals:

Front faces give you the start points and the back faces give you the end points. The usual procedure goes like this:

- Draw a cube’s front faces into surface A and back faces into surface B. This determines ray intervals.

  - Attach two textures to the current FBO to render to both surfaces simultaneously.

  - Use a fragment shader that writes out normalized object-space coordinates to the RGB channels.

- Draw a full-screen quad to perform the raycast.

  - Bind three textures: the two interval surfaces, and the 3D texture you’re raycasting against.

  - Sample the two interval surfaces to obtain ray start and stop points. If they’re equal, issue a discard.

Making it Single-Pass

To make this a one-pass process and remove two texture lookups from the fragment shader, we can use a procedure like this:

- Draw a cube’s front-faces to perform the raycast.

  - On the CPU, compute the eye position in object space and send it down as a uniform.

  - Also on the CPU, compute a focal length based on the field-of-view that you’re using to generate your scene’s projection matrix.

  - At the top of the fragment shader, perform a ray-cube intersection.

Raycasting on front-faces instead of a full-screen quad allows you to avoid the need to test for intersection failure. Traditional raycasting shaders issue a discard if there’s no intersection with the view volume, but since we’re guaranteed to hit the viewing volume, there’s no need.

Without further ado, here’s my fragment shader using modern GLSL syntax:

out vec4 FragColor;

uniform mat4 Modelview;

uniform float FocalLength;

uniform vec2 WindowSize;

uniform vec3 RayOrigin;

struct Ray {

vec3 Origin;

vec3 Dir;

};

struct AABB {

vec3 Min;

vec3 Max;

};

bool IntersectBox(Ray r, AABB aabb, out float t0, out float t1)

{

vec3 invR = 1.0 / r.Dir;

vec3 tbot = invR * (aabb.Min-r.Origin);

vec3 ttop = invR * (aabb.Max-r.Origin);

vec3 tmin = min(ttop, tbot);

vec3 tmax = max(ttop, tbot);

vec2 t = max(tmin.xx, tmin.yz);

t0 = max(t.x, t.y);

t = min(tmax.xx, tmax.yz);

t1 = min(t.x, t.y);

return t0 <= t1;

}

void main()

{

vec3 rayDirection;

rayDirection.xy = 2.0 * gl_FragCoord.xy / WindowSize - 1.0;

rayDirection.z = -FocalLength;

rayDirection = (vec4(rayDirection, 0) * Modelview).xyz;

Ray eye = Ray( RayOrigin, normalize(rayDirection) );

AABB aabb = AABB(vec3(-1.0), vec3(+1.0));

float tnear, tfar;

IntersectBox(eye, aabb, tnear, tfar);

if (tnear < 0.0) tnear = 0.0;

vec3 rayStart = eye.Origin + eye.Dir * tnear;

vec3 rayStop = eye.Origin + eye.Dir * tfar;

// Transform from object space to texture coordinate space:

rayStart = 0.5 * (rayStart + 1.0);

rayStop = 0.5 * (rayStop + 1.0);

// Perform the ray marching:

vec3 pos = rayStart;

vec3 step = normalize(rayStop-rayStart) * stepSize;

float travel = distance(rayStop, rayStart);

for (int i=0; i < MaxSamples && travel > 0.0; ++i, pos += step, travel -= stepSize) {

// ...lighting and absorption stuff here...

}

}

The shader works by using gl_FragCoord and a given FocalLength value to generate a ray direction. Just like a traditional CPU-based raytracer, the appropriate analogy is to imagine holding a square piece of chicken wire in front of you, tracing rays from your eyes through the holes in the mesh.
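The ray-cube intersection is the classic slab method. Here is a hedged Python sketch of the same test, handy for checking ray intervals on the CPU. The function name `intersect_box` and its default cube are assumptions for illustration. Unlike the GLSL version, which leans on floating-point infinities when a direction component is zero, Python raises on division by zero, so that case is handled explicitly.

```python
def intersect_box(origin, direction,
                  box_min=(-1.0, -1.0, -1.0), box_max=(1.0, 1.0, 1.0)):
    """Slab-method ray/AABB test; returns (hit, t_near, t_far)."""
    t_near, t_far = float("-inf"), float("inf")
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if d == 0.0:
            # Ray is parallel to this slab: it misses unless the origin
            # already lies between the two planes.
            if o < lo or o > hi:
                return False, t_near, t_far
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        t_near = max(t_near, min(t0, t1))
        t_far = min(t_far, max(t0, t1))
    return t_near <= t_far, t_near, t_far
```

For an eye at (0, 0, 3) looking down the negative Z axis, the ray enters the cube at t = 2 and leaves at t = 4.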

If you’re integrating the raycast volume into an existing scene, computing FocalLength and RayOrigin can be a little tricky, but it shouldn’t be too difficult. Here’s a little sketch I made:

In days of yore, most OpenGL programmers would use the gluPerspective function to compute a projection matrix, although nowadays you’re probably using your vector math library of choice. My personal favorite is the simple C++ vector library from Sony that’s included in Bullet. Anyway, you’re probably calling a function that takes a field-of-view angle as an argument:

Matrix4 Perspective(float fovy, float aspectRatio, float nearPlane, float farPlane);

Based on the above diagram, converting the fov value into a focal length is easy:

float focalLength = 1.0f / tan(FieldOfView / 2);

You’re also probably calling a function kinda like gluLookAt to compute your view matrix:

Matrix4 LookAt(Point3 eyePosition, Point3 targetPosition, Vector3 up);

To compute a ray origin, transform the eye position from world space into object space, relative to the viewing cube.
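Both CPU-side computations can be sketched in a few lines of Python. The sketch assumes the modelview matrix is rigid (a rotation R plus a translation t, with no scaling), in which case the eye, which sits at the origin of eye space, maps back to object space as -Rᵀt. The function names are hypothetical.

```python
import math

def focal_length(fovy_radians):
    """Distance from the eye to an image plane spanning [-1, +1] vertically."""
    return 1.0 / math.tan(fovy_radians / 2.0)

def eye_in_object_space(rotation, translation):
    """Eye position in object space for a rigid modelview matrix [R | t].

    `rotation` is a 3x3 row-major nested list and `translation` a 3-tuple.
    Computes -R^T t, the inverse transform applied to the eye-space origin.
    """
    return tuple(
        -sum(rotation[row][col] * translation[row] for row in range(3))
        for col in range(3)
    )
```

A 90-degree field of view gives a focal length of 1, and a modelview that only translates by (0, 0, -5) puts the eye at (0, 0, 5) in object space.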

Downloads

I’ve tested the code with Visual Studio 2010. It uses CMake for the build system.

I consider this code to be in the public domain. Enjoy!

Noise-Based Particles, Part I

Robert Bridson, that esteemed guru of fluid simulation, wrote a short-n-sweet 2007 SIGGRAPH paper on using Perlin noise to create turbulent effects in a divergence-free velocity field (PDF). Divergence-free means that the velocity field conforms to the incompressibility aspect of Navier-Stokes, making it somewhat believable from a physics standpoint. It’s a nice way to fake a fluid if you don’t have enough horsepower for a more rigorous physically-based system, such as the Eulerian grid in my Simple Fluid Simulation post.

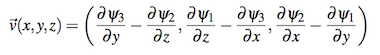

The basic idea is to introduce noise into a “potential field”, then derive velocity from the potential field using the curl operator. Here’s an expansion of the curl operator that shows how to obtain 3D velocity from potential:
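Written out in component form, this is the standard definition of curl, with Ψ = (Ψx, Ψy, Ψz) as the potential and v as the resulting velocity:

```latex
\vec{v} = \nabla \times \Psi = \left(
  \frac{\partial \Psi_z}{\partial y} - \frac{\partial \Psi_y}{\partial z},\;
  \frac{\partial \Psi_x}{\partial z} - \frac{\partial \Psi_z}{\partial x},\;
  \frac{\partial \Psi_y}{\partial x} - \frac{\partial \Psi_x}{\partial y}
\right)
```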

The fact that we call it “potential” and that we’re using a Ψ symbol isn’t really important. The point is to use whatever crazy values we want for our potential field and not to worry about it. As long as we keep the potential smooth (no discontinuities), it’s legal. That’s because we’re taking its curl to obtain velocity, and the curl of any smooth field is always divergence-free:
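In symbols, this is the usual vector-calculus identity:

```latex
\nabla \cdot \vec{v} = \nabla \cdot \left( \nabla \times \Psi \right) = 0
```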

Implementation

You really only need four ingredients for this recipe:

- Distance field for the rigid obstacles in your scene

- Intensity map representing areas where you’d like to inject turbulence

- vec3-to-vec3 function for turbulent noise (gets multiplied by the above map)

- Simple, smooth field of vectors for starting potential (e.g., buoyancy)

For the third bullet, a nice-looking result can be obtained by summing up three octaves of Perlin noise. For GPU-based particles, it can be represented with a 3D RGB texture. Technically the noise should be time-varying (an array of 3D textures), but in practice a single frame of noise seems good enough.

The fourth bullet is a bit tricky because the concept of “inverse curl” is dodgy; given a velocity field, you cannot recover a unique potential field from it. Luckily, for smoke, we simply need global upward velocities for buoyancy, and coming up with a reasonable source potential isn’t difficult. Conceptually, it helps to think of topo lines in the potential field as streamlines.

The final potential field is created by blending together the above four ingredients in a reasonable manner. I created an interactive diagram with graphviz that shows how the ingredients come together. If your browser supports SVG, you can click on the diagram, then click any node to see a visualization of the plane at Z=0.

If you’re storing the final velocity field in a 3D texture, most of the processing in the diagram needs to occur only once, at startup. The final velocity field can be static as long as your obstacles don’t move.

Obtaining velocity from the potential field is done with the curl operator; in pseudocode it looks like this:

Vector3 ComputeCurl(Point3 p)

{

const float e = 1e-4f;

Vector3 dx(e, 0, 0);

Vector3 dy(0, e, 0);

Vector3 dz(0, 0, e);

float x = SamplePotential(p + dy).z - SamplePotential(p - dy).z

- SamplePotential(p + dz).y + SamplePotential(p - dz).y;

float y = SamplePotential(p + dz).x - SamplePotential(p - dz).x

- SamplePotential(p + dx).z + SamplePotential(p - dx).z;

float z = SamplePotential(p + dx).y - SamplePotential(p - dx).y

- SamplePotential(p + dy).x + SamplePotential(p - dy).x;

return Vector3(x, y, z) / (2*e);

}
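To convince yourself that the result really is divergence-free, you can check it numerically. The Python sketch below uses an arbitrary smooth potential chosen purely for illustration; `potential`, `curl`, and `divergence` are hypothetical helpers that use the same central-difference scheme as the pseudocode above.

```python
import math

def potential(p):
    """Arbitrary smooth potential, chosen only for illustration."""
    x, y, z = p
    return (math.sin(y) * z, math.cos(z) * x, math.sin(x * y))

def partial(f, p, component, axis, e):
    """Central-difference derivative of one component of f along one axis."""
    hi = list(p)
    lo = list(p)
    hi[axis] += e
    lo[axis] -= e
    return (f(hi)[component] - f(lo)[component]) / (2 * e)

def curl(f, p, e=1e-3):
    """Central-difference curl of the vector field f at point p."""
    return (partial(f, p, 2, 1, e) - partial(f, p, 1, 2, e),
            partial(f, p, 0, 2, e) - partial(f, p, 2, 0, e),
            partial(f, p, 1, 0, e) - partial(f, p, 0, 1, e))

def divergence(f, p, e=1e-3):
    """Central-difference divergence of the vector field f at point p."""
    return sum(partial(f, p, axis, axis, e) for axis in range(3))

def velocity(p):
    """Velocity field obtained by taking the curl of the potential."""
    return curl(potential, p)
```

Evaluating `divergence(velocity, p)` at any point returns a value that is zero up to floating-point round-off, no matter how wild the potential is.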

Equally useful is a ComputeGradient function, which is used against the distance field to obtain a value that can be mixed into the potential field:

Vector3 ComputeGradient(Point3 p)

{

const float e = 0.01f;

Vector3 dx(e, 0, 0);

Vector3 dy(0, e, 0);

Vector3 dz(0, 0, e);

float d = SampleDistance(p);

float dfdx = SampleDistance(p + dx) - d;

float dfdy = SampleDistance(p + dy) - d;

float dfdz = SampleDistance(p + dz) - d;

return normalize(Vector3(dfdx, dfdy, dfdz));

}

Blending the distance gradient into an existing potential field can be a bit tricky; here’s one way of doing it:

// Returns a modified potential vector, respecting the boundaries defined by distanceGradient

Vector3 BlendVectors(Vector3 potential, Vector3 distanceGradient, float alpha)

{

float dp = dot(potential, distanceGradient);

return alpha * potential + (1-alpha) * dp * distanceGradient;

}

The alpha parameter in the above snippet can be computed by applying a ramp function to the current distance value.
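As a sketch of what such a ramp might look like, here is a Python version that uses smoothstep. That choice is an assumption on my part, as are the `influence_radius` parameter and the function names; any smooth function that rises from 0 at the obstacle surface to 1 at some distance would do.

```python
def smoothstep_ramp(distance, influence_radius):
    """0 at the obstacle surface, rising smoothly to 1 at influence_radius."""
    r = min(max(distance / influence_radius, 0.0), 1.0)
    return r * r * (3.0 - 2.0 * r)

def blend_vectors(potential, distance_gradient, alpha):
    """Python transcription of the BlendVectors pseudocode."""
    dp = sum(p * g for p, g in zip(potential, distance_gradient))
    return tuple(alpha * p + (1.0 - alpha) * dp * g
                 for p, g in zip(potential, distance_gradient))
```

Far from an obstacle, alpha is 1 and the potential passes through untouched. At the surface, alpha is 0 and only the component of the potential along the distance gradient survives.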

Motion Blur

Let’s turn now to some of the rendering issues involved. Let’s assume you’ve got very little horsepower, or you’re working in a very small time slice — this might be why you’re using a noise-based solution anyway. You might only be capable of a one-thousand particle system instead of a one-million particle system. For your particles to be space-filling, consider adding some motion blur.

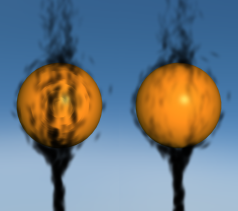

Motion blur might seem overkill at first, but since it inflates your billboards in an intelligent way, you can have fewer particles in your system. The image to the right (click to enlarge) shows how billboards can be stretched and oriented according to their velocities.

Note that it can be tricky to perform velocity alignment and still allow for certain viewing angles. For more on the subject (and some code), see this section of my previous blog entry.

Soft Particles

Another rendering issue that can crop up is hard edges. This occurs when you’re depth-testing billboards against obstacles in your scene — the effect is shown on the left in the image below.

Turns out that an excellent chapter in GPU Gems 3 discusses this issue. (Here’s a link to the online version of the chapter.) The basic idea is to fade alpha in your fragment shader according to the Z distance between the current fragment and the depth obstacle. This method is called soft particles, and you can see the result on the right in the comparison image.

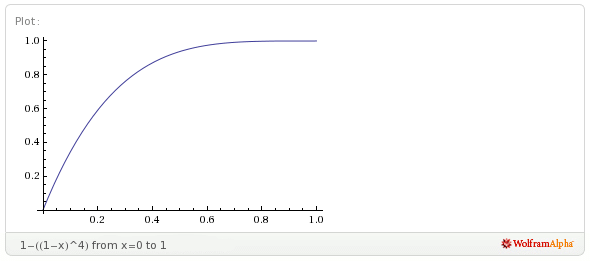

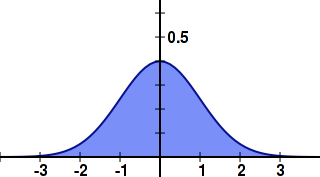

I found that using a linear fade-out can cause particles to appear too light. To alleviate this, I decided to apply a quartic falloff to the alpha value. This makes the particle stay opaque longer with rapid fall-off. When I’m prototyping simple functions like this, I like to use Wolfram Alpha as my web-based graphing calculator. Here’s one possibility (click to view in Wolfram Alpha):

It’s tempting to use trig functions when designing ramp functions like this, but keep in mind that they can be quite costly.

Without further ado, here’s the fragment shader that I used to render my smoke particles:

uniform vec4 Color;

uniform vec2 InverseSize;

varying float gAlpha;

varying vec2 gTexCoord;

uniform sampler2D SpriteSampler;

uniform sampler2D DepthSampler;

void main()

{

vec2 tc = gl_FragCoord.xy * InverseSize;

float depth = texture2D(DepthSampler, tc).r;

if (depth < gl_FragCoord.z)

discard;

float d = depth - gl_FragCoord.z;

float softness = 1.0 - min(1.0, 40.0 * d);

softness *= softness;

softness = 1.0 - softness * softness;

float A = gAlpha * texture2D(SpriteSampler, gTexCoord).a;

gl_FragColor = Color * vec4(1, 1, 1, A * softness);

}

There are actually three alpha values involved in the above snippet: alpha due to the particle’s lifetime (gAlpha), alpha due to the circular nature of the sprite (SpriteSampler), and alpha due to the proximity of the nearest depth boundary (softness).

If you’d like, you can avoid the SpriteSampler lookup by evaluating the Gaussian function in the shader; I took that approach in my previous blog entry.

Next up is the compositing fragment shader that I used to blend the particle billboards against the scene. When this shader is active, the app is drawing a full-screen quad.

varying vec2 vTexCoord;

uniform sampler2D BackgroundSampler;

uniform sampler2D ParticlesSampler;

void main()

{

vec4 dest = texture2D(BackgroundSampler, vTexCoord);

vec4 src = texture2D(ParticlesSampler, vTexCoord);

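float a = 1.0 - src.a; // coverage; assumes the particle surface's alpha holds the remaining transparency

gl_FragColor.rgb = src.rgb * a + dest.rgb * (1.0 - a);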

gl_FragColor.a = 1.0;

}

Here’s the blending function I use when drawing the particles:

glBlendFuncSeparate(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA, GL_ZERO, GL_ONE_MINUS_SRC_ALPHA);

The rationale for this is explained in the GPU Gems chapter that I already mentioned.
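To see what that blend function does to the alpha channel, here is a tiny CPU emulation in C++. It is only a sketch: the `RGBA` struct and `Blend` function are hypothetical names, the blend equation is assumed to be the default GL_FUNC_ADD, and I am assuming the offscreen surface starts with alpha cleared to 1.

```cpp
struct RGBA { float r, g, b, a; };

// Emulates glBlendFuncSeparate(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA,
//                              GL_ZERO,      GL_ONE_MINUS_SRC_ALPHA)
// under the default GL_FUNC_ADD blend equation.
inline RGBA Blend(RGBA src, RGBA dst)
{
    float inv = 1.0f - src.a;
    RGBA out;
    out.r = src.r * src.a + dst.r * inv; // color: ordinary "over" blending
    out.g = src.g * src.a + dst.g * inv;
    out.b = src.b * src.a + dst.b * inv;
    out.a = dst.a * inv;                 // alpha: src factor is GL_ZERO, so dst is only attenuated
    return out;
}
```

Under those assumptions, the destination alpha ends up as the product of (1 - alpha) over every particle drawn to that pixel, which is the fraction of the background that should still show through when the surface is composited.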

Streamlines

Adding a streamline renderer to your app makes it easy to visualize turbulent effects. As seen in the video to the right, it’s also pretty fun to watch the streamlines grow. The easiest way to do this? Simply use very small billboards and remove the call to glClear! Well, you’ll probably still want to clear the surface at startup time, to prevent junk. Easy peasy!

Downloads

I’ve tested the code with Mac OS X and Windows with Visual Studio 2010. It uses CMake for the build system.

I consider this code to be in the public domain. Enjoy!

Tron, Volumetric Lines, and Meshless Tubes

With Tron: Legacy hitting theaters, I thought it’d be fun to write a post on volumetric line strips. They come in handy for a variety of effects (lightsabers, lightning, particle traces, etc.). In many cases, you can render volumetric lines by drawing thin lines into an offscreen surface, then blurring the entire surface. However, in some cases, screen-space blur won’t work out for you. Maybe you’re fill-rate bound, or maybe you need immediate depth-testing. You’d prefer a single-pass solution, and you want your volumetric lines to look great even when the lines are aligned to the viewing direction.

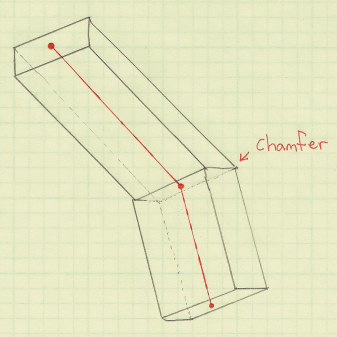

Geometry shaders to the rescue! By having the geometry shader emit a cuboid for each line segment, the fragment shader can perform a variety of effects within the screen-space region defined by the projected cuboid. This includes Gaussian splatting, alpha texturing, or even simple raytracing of cylinders or capsules. I stole the general idea from Sébastien Hillaire.

You can apply this technique to line strip primitives with or without adjacency. If you include adjacency, your geometry shader can chamfer the ends of the cuboid, turning the cuboid into a general prismoid and creating a tighter screen-space region.

I hate bloating vertex buffers with adjacency information. However, with GL_LINE_STRIP_ADJACENCY, adjacency incurs very little cost: simply add an extra vert to the beginning and end of your vertex buffer and you’re done! For more on adjacency, see my post on silhouettes or this interesting post on avoiding adjacency for terrain silhouettes.

For line strips, you might want to use GL_MAX blending rather than simple additive blending. This makes it easy to avoid extra glow at the joints.

Here’s a simplified version of the geometry shader, using old-school GLSL syntax to make Apple fans happy:

uniform mat4 ModelviewProjection;

uniform float Radius; // Half-width of the prismoid; read in main() below

varying in vec3 vPosition[4]; // Four inputs since we're using GL_LINE_STRIP_ADJACENCY

varying in vec3 vNormal[4]; // Orientation vectors are necessary for consistent alignment

vec4 prismoid[8]; // Scratch space for the eight corners of the prismoid

void emit(int a, int b, int c, int d)

{

gl_Position = prismoid[a]; EmitVertex();

gl_Position = prismoid[b]; EmitVertex();

gl_Position = prismoid[c]; EmitVertex();

gl_Position = prismoid[d]; EmitVertex();

EndPrimitive();

}

void main()

{

// Compute orientation vectors for the two connecting faces:

vec3 p0, p1, p2, p3;

p0 = vPosition[0]; p1 = vPosition[1];

p2 = vPosition[2]; p3 = vPosition[3];

vec3 n0 = normalize(p1-p0);

vec3 n1 = normalize(p2-p1);

vec3 n2 = normalize(p3-p2);

vec3 u = normalize(n0+n1);

vec3 v = normalize(n1+n2);

// Declare scratch variables for basis vectors:

vec3 i,j,k; float r = Radius;

// Compute face 1 of 2:

j = u; i = vNormal[1]; k = cross(i, j); i *= r; k *= r;

prismoid[0] = ModelviewProjection * vec4(p1 + i + k, 1);

prismoid[1] = ModelviewProjection * vec4(p1 + i - k, 1);

prismoid[2] = ModelviewProjection * vec4(p1 - i - k, 1);

prismoid[3] = ModelviewProjection * vec4(p1 - i + k, 1);

// Compute face 2 of 2:

j = v; i = vNormal[2]; k = cross(i, j); i *= r; k *= r;

prismoid[4] = ModelviewProjection * vec4(p2 + i + k, 1);

prismoid[5] = ModelviewProjection * vec4(p2 + i - k, 1);

prismoid[6] = ModelviewProjection * vec4(p2 - i - k, 1);

prismoid[7] = ModelviewProjection * vec4(p2 - i + k, 1);

// Emit the six faces of the prismoid:

emit(0,1,3,2); emit(5,4,6,7);

emit(4,5,0,1); emit(3,2,7,6);

emit(0,3,4,7); emit(2,1,6,5);

}

Glow With Point-Line Distance

Here’s my fragment shader for Tron-like glow. For this to link properly, the geometry shader needs to output some screen-space coordinates for the nodes in the line strip (gEndpoints[2] and gPosition). The fragment shader simply computes a point-line distance, using the result for the pixel’s intensity. It’s actually computing distance as “point-to-segment” rather than “point-to-infinite-line”. If you prefer the latter, you might be able to make an optimization by moving the distance computation up into the geometry shader.

uniform vec4 Color;

varying vec2 gEndpoints[2];

varying vec2 gPosition;

uniform float Radius;

uniform mat4 Projection;

// Return distance from point 'p' to line segment 'a b':

float line_distance(vec2 p, vec2 a, vec2 b)

{

float dist = distance(a,b);

vec2 v = normalize(b-a);

float t = dot(v,p-a);

vec2 spinePoint;

if (t > dist) spinePoint = b;

else if (t > 0.0) spinePoint = a + t*v;

else spinePoint = a;

return distance(p,spinePoint);

}

void main()

{

float d = line_distance(gPosition, gEndpoints[0], gEndpoints[1]);

gl_FragColor = vec4(vec3(1.0 - 12.0 * d), 1.0);

}
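If you want to compare the two distance definitions outside of a shader, here is a C++ sketch of both. `SegmentDistance` mirrors the shader's logic with a clamp, while `LineDistance` is the unclamped "infinite line" variant. The `Vec2` type and all function names are stand-ins I made up for this example.

```cpp
#include <algorithm>
#include <cmath>

struct Vec2 { float x, y; };

inline float Dot(Vec2 a, Vec2 b) { return a.x * b.x + a.y * b.y; }
inline Vec2 Sub(Vec2 a, Vec2 b) { Vec2 r = {a.x - b.x, a.y - b.y}; return r; }
inline float Length(Vec2 v) { return std::sqrt(Dot(v, v)); }

// Distance from 'p' to the segment 'a b' (assumes a != b).
inline float SegmentDistance(Vec2 p, Vec2 a, Vec2 b)
{
    Vec2 ab = Sub(b, a);
    float len = Length(ab);
    Vec2 v = {ab.x / len, ab.y / len};
    // Project onto the segment direction, then clamp to the endpoints:
    float t = std::min(std::max(Dot(v, Sub(p, a)), 0.0f), len);
    Vec2 spine = {a.x + t * v.x, a.y + t * v.y};
    return Length(Sub(p, spine));
}

// Distance from 'p' to the infinite line through 'a' and 'b'.
// No clamping is needed, so a 2D cross product divided by |b - a| suffices.
inline float LineDistance(Vec2 p, Vec2 a, Vec2 b)
{
    Vec2 ab = Sub(b, a);
    Vec2 ap = Sub(p, a);
    return std::fabs(ab.x * ap.y - ab.y * ap.x) / Length(ab);
}
```

The two functions agree for points that project onto the segment and differ only past the endpoints, which is where the segment version produces rounded caps.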

Raytraced Cylindrical Imposters

I’m not sure how useful this is, but you can actually go a step further and perform a ray-cylinder intersection test in your fragment shader and use the surface normal to perform lighting. The result: triangle-free tubes! By writing to the gl_FragDepth variable, you can enable depth-testing, and your tubes integrate into the scene like magic; no tessellation required. Here’s an excerpt from my fragment shader. (For the full shader, download the source code at the end of the article.)

vec3 perp(vec3 v)

{

vec3 b = cross(v, vec3(0, 0, 1));

if (dot(b, b) < 0.01)

b = cross(v, vec3(0, 1, 0));

return b;

}

bool IntersectCylinder(vec3 origin, vec3 dir, out float t)

{

vec3 A = gEndpoints[1]; vec3 B = gEndpoints[2];

float Epsilon = 0.0000001;

float extent = distance(A, B);

vec3 W = (B - A) / extent;

vec3 U = perp(W);

vec3 V = cross(U, W);

U = normalize(cross(V, W));

V = normalize(V);

float rSqr = Radius*Radius;

vec3 diff = origin - 0.5 * (A + B);

mat3 basis = mat3(U, V, W);

vec3 P = diff * basis;

float dz = dot(W, dir);

if (abs(dz) >= 1.0 - Epsilon) {

float radialSqrDist = rSqr - P.x*P.x - P.y*P.y;

if (radialSqrDist < 0.0)

return false;

t = (dz > 0.0 ? -P.z : P.z) + extent * 0.5;

return true;

}

vec3 D = vec3(dot(U, dir), dot(V, dir), dz);

float a0 = P.x*P.x + P.y*P.y - rSqr;

float a1 = P.x*D.x + P.y*D.y;

float a2 = D.x*D.x + D.y*D.y;

float discr = a1*a1 - a0*a2;

if (discr < 0.0)

return false;

if (discr > Epsilon) {

float root = sqrt(discr);

float inv = 1.0/a2;

t = (-a1 + root)*inv;

return true;

}

t = -a1/a2;

return true;

}

Motion-Blurred Billboards

Emitting cuboids from the geometry shader is great fun, but it’s overkill for many tasks. If you want to render short particle traces, it’s easier just to emit a quad from your geometry shader. The quad can be oriented and stretched according to the screen-space projection of the particle’s velocity vector. The tricky part is handling degenerate conditions: velocity might be close to zero, or velocity might be Z-aligned.

One technique to deal with this is to interpolate the emitted quad between a vertically-aligned square and a velocity-aligned rectangle, basing the lerping factor on the magnitude of the velocity vector projected into screen-space.

Here’s the full geometry shader and fragment shader for motion-blurred particles. The geometry shader receives two endpoints of a line segment as input, and uses these to determine velocity.

-- Geometry Shader

varying in vec3 vPosition[2];

varying out vec2 gCoord;

uniform mat4 ModelviewProjection;

uniform float Radius;

uniform mat3 Modelview;

uniform mat4 Projection;

uniform float Time;

float Epsilon = 0.001;

void main()

{

vec3 p = mix(vPosition[0], vPosition[1], Time);

float w = Radius * 0.5;

float h = w * 2.0;

vec3 u = Modelview * (vPosition[1] - vPosition[0]);

// Determine 't', which represents Z-aligned magnitude.

// By default, t = 0.0.

// If velocity aligns with Z, increase t towards 1.0.

// If total speed is negligible, increase t towards 1.0.

float t = 0.0;

float nz = abs(normalize(u).z);

if (nz > 1.0 - Epsilon)

t = (nz - (1.0 - Epsilon)) / Epsilon;

else if (dot(u,u) < Epsilon)

t = (Epsilon - dot(u,u)) / Epsilon;

// Compute screen-space velocity:

u.z = 0.0;

u = normalize(u);

// Lerp the orientation vector if screen-space velocity is negligible:

u = normalize(mix(u, vec3(1,0,0), t));

h = mix(h, w, t);

// Compute the change-of-basis matrix for the billboard:

vec3 v = vec3(-u.y, u.x, 0);

vec3 a = u * Modelview;

vec3 b = v * Modelview;

vec3 c = cross(a, b);

mat3 basis = mat3(a, b, c);

// Compute the four offset vectors:

vec3 N = basis * vec3(0,w,0);

vec3 S = basis * vec3(0,-w,0);

vec3 E = basis * vec3(-h,0,0);

vec3 W = basis * vec3(h,0,0);

// Emit the quad:

gCoord = vec2(1,1); gl_Position = ModelviewProjection * vec4(p+N+E,1); EmitVertex();

gCoord = vec2(-1,1); gl_Position = ModelviewProjection * vec4(p+N+W,1); EmitVertex();

gCoord = vec2(1,-1); gl_Position = ModelviewProjection * vec4(p+S+E,1); EmitVertex();

gCoord = vec2(-1,-1); gl_Position = ModelviewProjection * vec4(p+S+W,1); EmitVertex();

EndPrimitive();

}

-- Fragment Shader

varying vec2 gCoord;

void main()

{

float r2 = dot(gCoord, gCoord);

float d = exp(r2 * -1.2); // Gaussian Splat

gl_FragColor = vec4(vec3(d), 1.0);

}

Diversion: Hilbert Cubed Sphere

You might’ve noticed the interesting path that I’ve used for my examples. This is a Hilbert curve drawn on the surface of a cube, with the cube deformed into a sphere. If you think of a Hilbert curve as a parametric function from R1 to R2, then any two points that are reasonably close in R1 are also reasonably close in R2. Cool huh? Here’s some C++ code for how I constructed the vertex buffer for the Hilbert cube:

struct Turtle {

void Move(float dx, float dy, bool changeFace = false) {

if (changeFace) ++Face;

switch (Face) {

case 0: P += Vector3(dx, dy, 0); break;

case 1: P += Vector3(0, dy, dx); break;

case 2: P += Vector3(-dy, 0, dx); break;

case 3: P += Vector3(0, -dy, dx); break;

case 4: P += Vector3(dy, 0, dx); break;

case 5: P += Vector3(dy, dx, 0); break;

}

HilbertPath.push_back(P);

}

Point3 P;

int Face;

};

static void HilbertU(int level)

{

if (level == 0) return;

HilbertD(level-1); HilbertTurtle.Move(0, -dist);

HilbertU(level-1); HilbertTurtle.Move(dist, 0);

HilbertU(level-1); HilbertTurtle.Move(0, dist);

HilbertC(level-1);

}

static void HilbertD(int level)

{

if (level == 0) return;

HilbertU(level-1); HilbertTurtle.Move(dist, 0);

HilbertD(level-1); HilbertTurtle.Move(0, -dist);

HilbertD(level-1); HilbertTurtle.Move(-dist, 0);

HilbertA(level-1);

}

static void HilbertC(int level)

{

if (level == 0) return;

HilbertA(level-1); HilbertTurtle.Move(-dist, 0);

HilbertC(level-1); HilbertTurtle.Move(0, dist);

HilbertC(level-1); HilbertTurtle.Move(dist, 0);

HilbertU(level-1);

}

static void HilbertA(int level)

{

if (level == 0) return;

HilbertC(level-1); HilbertTurtle.Move(0, dist);

HilbertA(level-1); HilbertTurtle.Move(-dist, 0);

HilbertA(level-1); HilbertTurtle.Move(0, -dist);

HilbertD(level-1);

}

void CreateHilbertCube(int lod)

{

HilbertU(lod); HilbertTurtle.Move(dist, 0, true);

HilbertU(lod); HilbertTurtle.Move(0, dist, true);

HilbertC(lod); HilbertTurtle.Move(0, dist, true);

HilbertC(lod); HilbertTurtle.Move(0, dist, true);

HilbertC(lod); HilbertTurtle.Move(dist, 0, true);

HilbertD(lod);

}
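The locality property is easy to check numerically. The sketch below does not use the turtle code above; it swaps in the well-known index-to-cell mapping for a Hilbert curve on a single n-by-n grid (n a power of two) and verifies that consecutive indices always land on neighboring cells. All function names here are hypothetical.

```cpp
#include <cstdlib>

// Rotate/flip a quadrant so the sub-curve gets the right orientation.
inline void HilbertRotate(int n, int& x, int& y, int rx, int ry)
{
    if (ry == 0) {
        if (rx == 1) { x = n - 1 - x; y = n - 1 - y; }
        int tmp = x; x = y; y = tmp;
    }
}

// Map a distance 'd' along the curve to a cell (x, y) in an n-by-n grid.
inline void HilbertIndexToCell(int n, int d, int& x, int& y)
{
    x = y = 0;
    for (int s = 1, t = d; s < n; s *= 2, t /= 4) {
        int rx = 1 & (t / 2);
        int ry = 1 & (t ^ rx);
        HilbertRotate(s, x, y, rx, ry);
        x += s * rx;
        y += s * ry;
    }
}

// True if every pair of consecutive indices maps to edge-adjacent cells.
inline bool ConsecutiveCellsAreAdjacent(int n)
{
    int px, py;
    HilbertIndexToCell(n, 0, px, py);
    for (int d = 1; d < n * n; ++d) {
        int x, y;
        HilbertIndexToCell(n, d, x, y);
        if (std::abs(x - px) + std::abs(y - py) != 1) return false;
        px = x; py = y;
    }
    return true;
}
```

Calling `ConsecutiveCellsAreAdjacent(8)` walks all 64 cells of an 8x8 grid; the same one-step-at-a-time behavior is what the turtle relies on when it appends each new point to the path.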

Deforming the cube into a sphere is easy: just normalize the positions!

Downloads

I tested the code on Mac OS X and on Windows. It uses CMake for the build system, and I consider the code to be in the public domain. A video of the app is embedded at the bottom of this page. Enjoy!

Volumetric Splatting

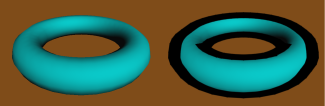

Instanced rendering turns out to be useful for volumetric graphics. I first came across the concept in this excellent chapter in GPU Gems 3, which briefly mentions that instancing can voxelize a model in only 1 draw call using nothing but a quad. After reading this, I had the idea of using instancing to efficiently render volumetric splats. Splatting is useful for creating distance fields and Voronoi maps. It can also be used to extrude a streamline into a space-filling velocity field.

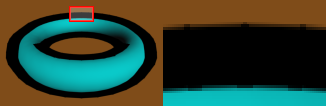

In this article, I show how volumetric splatting can be implemented efficiently with instancing. I show how to leverage splatting to extrude a circular streamline into a toroidal field of velocity vectors. This technique would allow an artist to design a large velocity field (e.g., for a particle system), simply by specifying a small animation path through space. My article also covers some basics of modern-day volume rendering on the GPU.

Volume Raycasting

Before I show you my raycasting shader, let me show you a neat trick to easily obtain the start and stop points for the rays. It works by drawing a cube into a pair of floating-point RGB surfaces, using a fragment shader that writes out object-space coordinates. Frontfaces go into one color attachment, backfaces in the other. This results in a tidy set of start/stop positions. Here are the shaders:

-- Vertex

in vec4 Position;

out vec3 vPosition;

uniform mat4 ModelviewProjection;

void main()

{

gl_Position = ModelviewProjection * Position;

vPosition = Position.xyz;

}

-- Fragment

in vec3 vPosition;

out vec3 FragData[2];

void main()

{

if (gl_FrontFacing) {

FragData[0] = 0.5 * (vPosition + 1.0);

FragData[1] = vec3(0);

} else {

FragData[0] = vec3(0);

FragData[1] = 0.5 * (vPosition + 1.0);

}

}

Update: I recently realized that the pair of endpoint surfaces can be avoided by performing a quick ray-cube intersection in the fragment shader. I wrote a blog entry about it here.
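For readers curious about what such an intersection involves, here is a C++ sketch of the common "slab method" for a ray against the cube spanning -1 to +1 on each axis. This is a generic textbook approach, not necessarily what the linked entry does, and the `Vec3` type and `IntersectUnitCube` name are placeholders of my own.

```cpp
#include <algorithm>
#include <utility>

struct Vec3 { float x, y, z; };

// Slab-method intersection of a ray with the axis-aligned cube [-1, +1]^3.
// On a hit, tNear and tFar receive the ray parameters of the entry and exit
// points (tNear is negative when the origin is inside the cube).
inline bool IntersectUnitCube(Vec3 origin, Vec3 dir, float& tNear, float& tFar)
{
    const float o[3] = {origin.x, origin.y, origin.z};
    const float d[3] = {dir.x, dir.y, dir.z};
    tNear = -1e30f;
    tFar = 1e30f;
    for (int axis = 0; axis < 3; ++axis) {
        if (d[axis] == 0.0f) {
            // Parallel to this pair of planes: miss unless we start between them.
            if (o[axis] < -1.0f || o[axis] > 1.0f) return false;
            continue;
        }
        float t0 = (-1.0f - o[axis]) / d[axis];
        float t1 = (+1.0f - o[axis]) / d[axis];
        if (t0 > t1) std::swap(t0, t1);
        tNear = std::max(tNear, t0); // latest entry across all slabs
        tFar = std::min(tFar, t1);   // earliest exit across all slabs
    }
    return tNear <= tFar && tFar >= 0.0f;
}
```

The entry and exit points `origin + tNear * dir` and `origin + tFar * dir` play the same role as the values stored in the two endpoint surfaces.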

You’ll want to first clear the surfaces to black and enable simple additive blending for this to work correctly. Note that we’re using multiple render targets (MRT) to generate both surfaces in a single pass. To pull this off, you’ll need to bind an FBO that has two color attachments, then issue a glDrawBuffers command before rendering, like this:

GLenum renderTargets[2] = {GL_COLOR_ATTACHMENT0, GL_COLOR_ATTACHMENT1};

glDrawBuffers(2, &renderTargets[0]);

// Render cube...

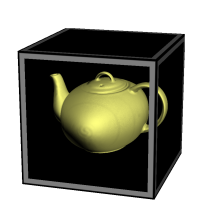

The next step is the actual raycasting, which is done by drawing a fullscreen quad with a longish fragment shader. We need to set up three texture samplers: two for the start/stop surfaces and one for the 3D volume texture itself. The following fragment shader performs raycasts against a single-channel 3D texture, which I used to generate the teapot image to the right. I obtained the scorpion-in-teapot volume data from this site at the University of Tübingen.

uniform sampler2D RayStart;

uniform sampler2D RayStop;

uniform sampler3D Volume;

out vec4 FragColor;

in vec3 vPosition;

uniform float StepLength = 0.01;

void main()

{

vec2 coord = 0.5 * (vPosition.xy + 1.0);

vec3 rayStart = texture(RayStart, coord).xyz;

vec3 rayStop = texture(RayStop, coord).xyz;

if (rayStart == rayStop) {

discard;

return;

}

vec3 ray = rayStop - rayStart;

float rayLength = length(ray);

vec3 stepVector = StepLength * ray/rayLength;

vec3 pos = rayStart;

vec4 dst = vec4(0);

while (dst.a < 1 && rayLength > 0) {

float density = texture(Volume, pos).x;

vec4 src = vec4(density);

src.rgb *= src.a;

dst = (1.0 - dst.a) * src + dst;

pos += stepVector;

rayLength -= StepLength;

}

FragColor = dst;

}

Note the front-to-back blending equation inside the while loop; Benjamin Supnik has a good article about front-to-back blending on his blog. One advantage of front-to-back raycasting: it allows you to break out of the loop on fully-opaque voxels.
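Here is the same accumulation written as a CPU loop in C++, which makes the early exit easy to see. It is a sketch with a single color channel for brevity; `Sample` and `CompositeFrontToBack` are names invented for this example.

```cpp
#include <vector>

struct Sample { float color, alpha; }; // one color channel for brevity

// Front-to-back compositing of density samples, ordered from the eye outward.
inline Sample CompositeFrontToBack(const std::vector<float>& densities)
{
    Sample dst = {0.0f, 0.0f};
    for (float density : densities) {
        if (dst.alpha >= 1.0f) break;                // fully opaque: later samples are hidden
        Sample src = {density * density, density};   // premultiplied, like src.rgb *= src.a
        float remaining = 1.0f - dst.alpha;          // how much light still gets through
        dst.color += remaining * src.color;
        dst.alpha += remaining * src.alpha;
    }
    return dst;
}
```

Two samples of density 0.5 accumulate to an alpha of 0.75, and a sample of density 1.0 ends the loop immediately.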

Volumetric Lighting

You’ll often want to create a more traditional lighting effect in your raycaster. For this, you’ll need to obtain surface normals somehow. Since we’re dealing with volume data, this might seem non-trivial, but it’s actually pretty simple.

Really there are two problems to solve: (1) detecting voxels that intersect surfaces in the volume data, and (2) computing the normal vectors at those positions. Turns out both of these problems can be addressed with an essential concept from vector calculus: the gradient vector points in the direction of greatest change, and its magnitude represents the amount of change. If we can compute the gradient at a particular location, we can check its magnitude to see if we’re crossing a surface. And, conveniently enough, the direction of the gradient is exactly what we want to use for our lighting normal!

The gradient vector is made up of the partial derivatives along the three axes; it can be approximated like this:

vec3 ComputeGradient(vec3 P)

{

float L = StepLength;

float V = texture(VolumeSampler, P).x; // Density at the sample point itself

float E = texture(VolumeSampler, P + vec3(L,0,0)).x;

float N = texture(VolumeSampler, P + vec3(0,L,0)).x;

float U = texture(VolumeSampler, P + vec3(0,0,L)).x;

return vec3(E - V, N - V, U - V);

}
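A quick way to convince yourself that forward differences recover the right direction is to try them on a field whose gradient you already know. The C++ sketch below does that with a callable standing in for the 3D texture; `ForwardGradient`, `Normalize`, and this `Vec3` are illustrative names, not part of the demo.

```cpp
#include <cmath>
#include <functional>

struct Vec3 { double x, y, z; };

// Forward-difference gradient of the scalar field 'f' at 'p' using step 'h'.
// Like the shader, this skips the division by 'h', since only the direction
// (and relative magnitude) matters for lighting.
inline Vec3 ForwardGradient(const std::function<double(Vec3)>& f, Vec3 p, double h)
{
    double v = f(p);
    Vec3 g = { f(Vec3{p.x + h, p.y, p.z}) - v,
               f(Vec3{p.x, p.y + h, p.z}) - v,
               f(Vec3{p.x, p.y, p.z + h}) - v };
    return g;
}

inline Vec3 Normalize(Vec3 v)
{
    double len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    Vec3 n = { v.x / len, v.y / len, v.z / len };
    return n;
}
```

For the linear field f = 2x + 3y + 6z, the normalized result is (2/7, 3/7, 6/7) no matter where you sample, matching the analytic gradient.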

For the teapot data, we’ll compute the gradient for lighting normals only when the current voxel’s value is above a certain threshold. This lets us avoid making too many texture lookups. The shader looks like this:

out vec4 FragColor;

in vec3 vPosition;

uniform sampler2D RayStart;

uniform sampler2D RayStop;

uniform sampler3D Volume;

uniform float StepLength = 0.01;

uniform float Threshold = 0.45;

uniform vec3 LightPosition;

uniform vec3 DiffuseMaterial;

uniform mat4 Modelview;

uniform mat3 NormalMatrix;

float lookup(vec3 coord)

{

return texture(Volume, coord).x;

}

void main()

{

vec2 coord = 0.5 * (vPosition.xy + 1.0);

vec3 rayStart = texture(RayStart, coord).xyz;

vec3 rayStop = texture(RayStop, coord).xyz;

if (rayStart == rayStop) {

discard;

return;

}

vec3 ray = rayStop - rayStart;

float rayLength = length(ray);

vec3 stepVector = StepLength * ray / rayLength;

vec3 pos = rayStart;

vec4 dst = vec4(0);

while (dst.a < 1 && rayLength > 0) {

float V = lookup(pos);

if (V > Threshold) {

float L = StepLength;

float E = lookup(pos + vec3(L,0,0));

float N = lookup(pos + vec3(0,L,0));

float U = lookup(pos + vec3(0,0,L));

vec3 normal = normalize(NormalMatrix * vec3(E - V, N - V, U - V));

vec3 light = LightPosition;

float df = abs(dot(normal, light));

vec3 color = df * DiffuseMaterial;

vec4 src = vec4(color, 1.0);

dst = (1.0 - dst.a) * src + dst;

break;

}

pos += stepVector;

rayLength -= StepLength;

}

FragColor = dst;

}

Reducing Slice Artifacts

When writing your first volume renderer, you’ll undoubtedly come across the scourge of “wood grain” artifacts; your data will look like it’s made up of a stack of slices (which it is!). Obviously, reducing the raycast step size can help with this, but doing so can be detrimental to performance.

There are a couple of popular tricks that can help: (1) re-checking the “solidity” of the voxel by jumping around at half-step intervals, and (2) jittering the ray’s starting position along the view direction. I added both of these tricks to our fragment shader; the new lines are the Noise sampler, the jittered rayStart, and the half-step probes inside the loop:

// ...same as before...

uniform sampler2D Noise;

void main()

{

// ...same as before...

rayStart += stepVector * texture(Noise, gl_FragCoord.xy / 256).r;

vec3 pos = rayStart;

vec4 dst = vec4(0);

while (dst.a < 1 && rayLength > 0) {

float V = lookup(pos);

if (V > Threshold) {

vec3 s = -stepVector * 0.5;

pos += s; V = lookup(pos);

if (V > Threshold) s *= 0.5; else s *= -0.5;

pos += s; V = lookup(pos);

if (V > Threshold) {

// ...same as before...

}

}

pos += stepVector;

rayLength -= StepLength;

}

FragColor = dst;

}
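To get a feel for how much the half-step probing helps, here is a one-dimensional C++ sketch of the first trick only (no jitter). It marches along a ray, and on the first sample above the threshold it backs up half a step, then moves a quarter step in whichever direction the probe suggests. The function name and parameters are hypothetical, and unlike the shader it returns the refined position without re-testing it.

```cpp
#include <functional>

// March from 0 to tMax in increments of 'step'. On the first sample whose
// density exceeds 'threshold', refine with two probes and return the refined
// position. Returns -1 if nothing is hit. 'coarseHit', if given, receives the
// unrefined hit position for comparison.
inline double MarchAndRefine(const std::function<double(double)>& density,
                             double step, double threshold, double tMax,
                             double* coarseHit = nullptr)
{
    for (double pos = 0.0; pos < tMax; pos += step) {
        if (density(pos) <= threshold) continue;
        if (coarseHit) *coarseHit = pos;
        double s = -step * 0.5;                        // back up half a step
        pos += s;
        s *= (density(pos) > threshold) ? 0.5 : -0.5;  // still solid: keep backing up; else step forward
        pos += s;
        return pos;
    }
    return -1.0;
}
```

With a surface at 0.33 and a step of 0.1, the coarse hit lands at 0.4 while the refined one lands at 0.325, so the error drops from 0.07 to 0.005 at the cost of two extra lookups.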

3D Gaussian Splat

Now that we’ve covered the basics of volume rendering, let’s come back to the main subject of this article, which deals with the generation of volumetric data using Gaussian splats.

One approach would be evaluating the 3D Gaussian function on the CPU during application initialization, and creating a 3D texture from that. However, I find it to be faster to simply compute the Gaussian in real-time, directly in the fragment shader.

Recall that we’re going to use instancing to render all the slices of the splat with only 1 draw call. One awkward aspect of GLSL is that the gl_InstanceID input variable is only accessible from the vertex shader, while the gl_Layer output variable is only accessible from the geometry shader. It’s not difficult to deal with this though! Without further ado, here’s the trinity of shaders for 3D Gaussian splatting:

-- Vertex Shader

in vec4 Position;

out vec2 vPosition;

out int vInstance;

uniform vec4 Center;

void main()

{

gl_Position = Position + Center;

vPosition = Position.xy;

vInstance = gl_InstanceID;

}

-- Geometry Shader

layout(triangles) in;

layout(triangle_strip, max_vertices = 3) out;

in int vInstance[3];

in vec2 vPosition[3];

out vec3 gPosition;

uniform float InverseSize;

void main()

{

gPosition.z = 1.0 - 2.0 * vInstance[0] * InverseSize;

gl_Layer = vInstance[0];

gPosition.xy = vPosition[0];

gl_Position = gl_in[0].gl_Position;

EmitVertex();

gPosition.xy = vPosition[1];

gl_Position = gl_in[1].gl_Position;

EmitVertex();

gPosition.xy = vPosition[2];

gl_Position = gl_in[2].gl_Position;

EmitVertex();

EndPrimitive();

}

-- Fragment Shader

in vec3 gPosition;

out vec3 FragColor;

uniform vec3 Color;

uniform float InverseVariance;

uniform float NormalizationConstant;

void main()

{

float r2 = dot(gPosition, gPosition);

FragColor = Color * NormalizationConstant * exp(r2 * InverseVariance);

}
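The post doesn't spell out how the InverseVariance and NormalizationConstant uniforms are computed, so treat the following C++ as one plausible choice rather than what the demo does. It uses the textbook isotropic 3D Gaussian with standard deviation sigma, measured in the same -1 to +1 units as gPosition. Note that InverseVariance comes out negative, since the shader multiplies it by r2 inside exp(). `SplatUniforms` and `ComputeSplatUniforms` are hypothetical names.

```cpp
#include <cmath>

struct SplatUniforms {
    float InverseVariance;       // multiplied by r^2 inside exp(), hence negative
    float NormalizationConstant; // scales the peak so the splat integrates to 1
};

// Assumed derivation: exp(-r^2 / (2 sigma^2)) / (2 pi sigma^2)^(3/2).
inline SplatUniforms ComputeSplatUniforms(float sigma)
{
    const float pi = 3.14159265358979f;
    float variance = sigma * sigma;
    SplatUniforms u;
    u.InverseVariance = -1.0f / (2.0f * variance);
    u.NormalizationConstant = 1.0f / std::pow(2.0f * pi * variance, 1.5f);
    return u;
}
```

For velocity splats you may prefer a NormalizationConstant of 1 so that the field at the center of each splat equals the streamline's velocity exactly; that's a design choice, not a requirement.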

Setting up a 3D texture as a render target might be new to you; here’s one way you could set up the FBO: (note that I’m calling glFramebufferTexture rather than glFramebufferTexture{2,3}D)

struct Volume {

GLuint FboHandle;

GLuint TextureHandle;

};

Volume CreateVolume(GLsizei width, GLsizei height, GLsizei depth)

{

Volume volume;

glGenFramebuffers(1, &volume.FboHandle);

glBindFramebuffer(GL_FRAMEBUFFER, volume.FboHandle);

GLuint textureHandle;

glGenTextures(1, &textureHandle);

glBindTexture(GL_TEXTURE_3D, textureHandle);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_WRAP_R, GL_CLAMP_TO_EDGE);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_MIN_FILTER, GL_LINEAR);

glTexParameteri(GL_TEXTURE_3D, GL_TEXTURE_MAG_FILTER, GL_LINEAR);

glTexImage3D(GL_TEXTURE_3D, 0, GL_RGB16F, width, height, depth, 0,

GL_RGB, GL_HALF_FLOAT, 0);

volume.TextureHandle = textureHandle;

GLint miplevel = 0;

GLuint colorbuffer;

glGenRenderbuffers(1, &colorbuffer);

glBindRenderbuffer(GL_RENDERBUFFER, colorbuffer);

glFramebufferTexture(GL_FRAMEBUFFER, GL_COLOR_ATTACHMENT0, textureHandle, miplevel);

return volume;

}

Note that we created a half-float RGB texture for volume rendering — this might seem like egregious usage of memory, but keep in mind that our end goal is to create a field of velocity vectors.

Flowline Extrusion

Now that we have the splat shaders ready, we can write the C++ code that extrudes a vector of positions into a velocity field. It works by looping over the positions in the path, computing the velocity at that point, and splatting the velocity. The call to glDrawArraysInstanced is simply rendering a quad with the instance count set to the depth of the splat.

typedef std::vector<VectorMath::Point3> PointList;

glEnable(GL_BLEND);

glBlendFunc(GL_ONE, GL_ONE);

PointList::const_iterator i = positions.begin();

for (; i != positions.end(); ++i) {

PointList::const_iterator next = i;

if (++next == positions.end())

next = positions.begin();

VectorMath::Vector3 velocity = (*next - *i);

GLint center = glGetUniformLocation(program, "Center");

glUniform4f(center, i->getX(), i->getY(), i->getZ(), 0);

GLint color = glGetUniformLocation(program, "Color");

glUniform3fv(color, 1, (float*) &velocity);

glDrawArraysInstanced(GL_TRIANGLE_STRIP, 0, 4, Size);

}

Blending is essential for this to work correctly. If you want to create a true distance field, you’d want to use GL_MAX blending rather than the default blending equation (which is GL_FUNC_ADD), and you’d want your fragment shader to evaluate a linear falloff rather than the Gaussian function.

Velocity Visualization

One popular way to visualize a grid of velocities is via short lines with alpha gradients, as in the image to the right. This technique is easy to implement with modern OpenGL. Simply populate a VBO with a single point per grid cell, then use the geometry shader to extrude each point into a short line segment whose length and direction reflect the velocity vector in that cell. It’s rather beautiful actually! Here’s the shader triplet:

-- Vertex Shader

in vec4 Position;

out vec4 vPosition;

uniform mat4 ModelviewProjection;

void main()

{

gl_Position = ModelviewProjection * Position;

vPosition = Position;

}

-- Geometry Shader

layout(points) in;

layout(line_strip, max_vertices = 2) out;

out float gAlpha;

uniform mat4 ModelviewProjection;

in vec4 vPosition[1];

uniform sampler3D Volume;

void main()

{

vec3 coord = 0.5 * (vPosition[0].xyz + 1.0);

vec4 V = vec4(texture(Volume, coord).xyz, 0.0);

gAlpha = 0;

gl_Position = gl_in[0].gl_Position;

EmitVertex();

gAlpha = 1;

gl_Position = ModelviewProjection * (vPosition[0] + V);

EmitVertex();

EndPrimitive();

}

-- Fragment Shader

out vec4 FragColor;

in float gAlpha;

uniform float Brightness = 0.5;

void main()

{

FragColor = Brightness * vec4(gAlpha, 0, 0, 1);

}

Downloads

The demo code uses a subset of the Pez ecosystem, which is included in the zip below. It uses CMake for the build system.

I consider this code to be in the public domain. Enjoy!

Simple Fluid Simulation

I finally wrote my first fluid simulation: two-dimensional smoke advected with GLSL fragment shaders. It was great fun, but let me warn you: it’s all too easy to drain away vast swaths of your life while tuning the millions of various parameters, just to get the right effect. It’s also rather addictive.

For my implementation, I used the classic Mark Harris article from GPU Gems 1 as my trusty guide. His article is available online here. Ah, 2004 seems like it was only yesterday…

Mark’s article is about a method called Eulerian Grid. In general, fluid simulation algorithms can be divided into three categories:

- Eulerian: Divides a cuboid of space into cells. Each cell contains a velocity vector and other information, such as density and temperature.

- Lagrangian: Particle-based physics, not as effective as Eulerian Grid for modeling “swirlies”. However, particles are much better for expansive regions, since they aren’t restricted to a grid.

- Hybrid: For large worlds that have specific regions where swirlies are desirable, use Lagrangian everywhere, but also place Eulerian grids in the regions of interest. When particles enter those regions, they become influenced by the grid’s velocity vectors. Jonathan Cohen has done some interesting work in this area.

Regardless of the method, the Navier-Stokes equation is at the root of it all. I won’t cover it here since you can read about it from a trillion different sources, all of which are far more authoritative than this blog. I’m focusing on implementation.

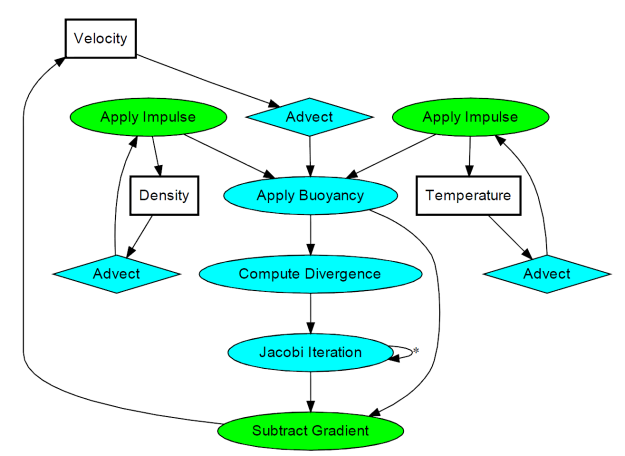

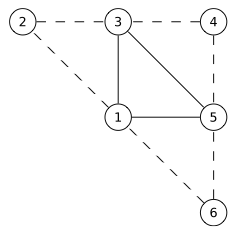

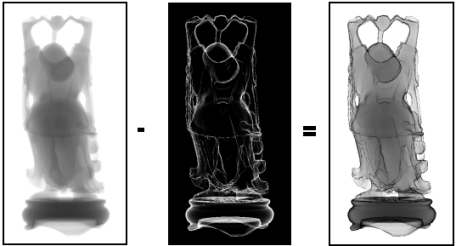

After reading Mark’s article, I found it useful to create a quick graphviz diagram for all the image processing:

It’s not as complicated as it looks. The processing stages are all drawing full-screen quads with surprisingly simple fragment shaders. There are a total of three floating-point surfaces being processed: Velocity (a 2-component texture), Density (a 1-component texture), and Temperature (another 1-component texture).

In practice, you’ll need six surfaces instead of three; this allows ping-ponging between render targets and source textures. In some cases you can use blending instead; those stages are shown in green.

The processing stages are:

- Advect: Copies a quantity from a neighboring cell into the current cell; projects the current velocity backwards to find the incoming value. This is used for any type of quantity, including density, temperature, and velocity itself.

- Apply Impulse: This stage accounts for external forces, such as user interaction or the immortal candle in my simulation.

- Apply Buoyancy: For smoke effects, temperature can influence velocity by making it rise. In my implementation, I also apply the weight of the smoke in this stage; high densities in cool regions will sink.

- Compute Divergence: This stage computes values for a temporary surface (think of it as “scratch space”) that’s required for computing the pressure component of the Navier-Stokes equation.

- Jacobi Iteration: This is the real meat of the algorithm; it requires many iterations to converge to a good pressure value. The number of iterations is one of the many tweakables that I referred to at the beginning of this post, and I found that ~40 iterations was a reasonable number.

- Subtract Gradient: In this stage, the gradient of the pressure gets subtracted from velocity.

The above list is by no means set in stone — there are many ways to create a fluid simulation. For example, the Buoyancy stage is not necessary for liquids. Also, many simulations have a Vorticity Confinement stage to better preserve curliness, which I decided to omit. I also left out a Viscous Diffusion stage, since it’s not very useful for smoke.
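The Advect stage is the easiest one to try on the CPU. Below is a one-dimensional C++ sketch of the backward-tracing idea: for each cell, step backwards along the velocity, sample the source there with linear interpolation, and scale by a dissipation factor. This is an illustration under my own assumptions (velocity in cells per unit time, clamping at the grid edges, a hypothetical `Advect1D` name), not a port of the demo's shader.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// One semi-Lagrangian advection step on a 1D grid.
// 'velocity' is in cells per unit time; 'source' is the quantity being moved.
// Both vectors are assumed to have the same, non-zero length.
inline std::vector<float> Advect1D(const std::vector<float>& velocity,
                                   const std::vector<float>& source,
                                   float dt, float dissipation)
{
    const int n = static_cast<int>(source.size());
    std::vector<float> result(n);
    for (int i = 0; i < n; ++i) {
        // Trace backwards to find where this cell's contents came from:
        float x = static_cast<float>(i) - dt * velocity[i];
        x = std::min(std::max(x, 0.0f), static_cast<float>(n - 1)); // clamp to the grid
        int i0 = static_cast<int>(std::floor(x));
        int i1 = std::min(i0 + 1, n - 1);
        float f = x - static_cast<float>(i0);
        // Linear interpolation stands in for the GPU's bilinear texture filter:
        result[i] = dissipation * ((1.0f - f) * source[i0] + f * source[i1]);
    }
    return result;
}
```

With a uniform velocity of one cell per step, a spike in cell 3 shows up in cell 4 after one call. Writing into a separate `result` vector is the CPU analogue of rendering into the other half of a ping-pong pair.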

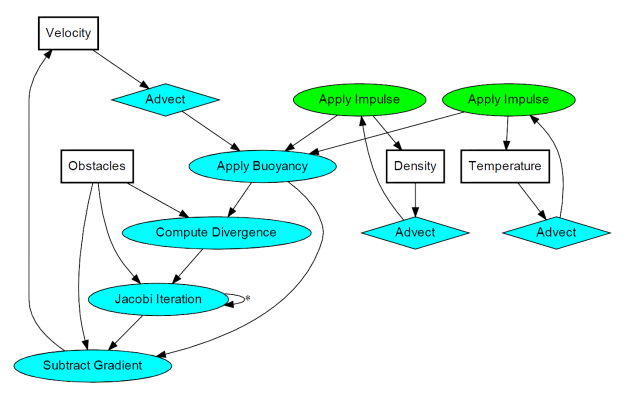

Dealing with obstacles is tricky. One way of enforcing boundary conditions is adding a new operation after every processing stage. The new operation executes a special draw call that only touches the pixels that need to be tweaked to keep Navier-Stokes happy.

Alternatively, you can perform boundary enforcement within your existing fragment shaders. This adds costly texture lookups, but makes it easier to handle dynamic boundaries, and it simplifies your top-level image processing logic. Here’s the new diagram that takes obstacles into account: (alas, we can no longer use blending for SubtractGradient)

Note that I added a new surface called Obstacles. It has three components: the red component is essentially a boolean for solid versus empty, and the green/blue channels represent the obstacle’s velocity.

For my C/C++ code, I defined tiny POD structures for the various surfaces, and simple functions for each processing stage. This makes the top-level rendering routine easy to follow:

struct Surface {

GLuint FboHandle;

GLuint TextureHandle;

int NumComponents;

};

struct Slab {

Surface Ping;

Surface Pong;

};

Slab Velocity, Density, Pressure, Temperature;

Surface Divergence, Obstacles;

// [snip]

glViewport(0, 0, GridWidth, GridHeight);

Advect(Velocity.Ping, Velocity.Ping, Obstacles, Velocity.Pong, VelocityDissipation);

SwapSurfaces(&Velocity);

Advect(Velocity.Ping, Temperature.Ping, Obstacles, Temperature.Pong, TemperatureDissipation);

SwapSurfaces(&Temperature);

Advect(Velocity.Ping, Density.Ping, Obstacles, Density.Pong, DensityDissipation);

SwapSurfaces(&Density);

ApplyBuoyancy(Velocity.Ping, Temperature.Ping, Density.Ping, Velocity.Pong);

SwapSurfaces(&Velocity);

ApplyImpulse(Temperature.Ping, ImpulsePosition, ImpulseTemperature);

ApplyImpulse(Density.Ping, ImpulsePosition, ImpulseDensity);

ComputeDivergence(Velocity.Ping, Obstacles, Divergence);

ClearSurface(Pressure.Ping, 0);

for (int i = 0; i < NumJacobiIterations; ++i) {

Jacobi(Pressure.Ping, Divergence, Obstacles, Pressure.Pong);

SwapSurfaces(&Pressure);

}

SubtractGradient(Velocity.Ping, Pressure.Ping, Obstacles, Velocity.Pong);

SwapSurfaces(&Velocity);
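The Ping/Pong pairs exist because a pass generally can’t read from the same surface it’s writing to. Here’s a tiny Python sketch of the pattern; the `Slab` class and `run_stage` helper are hypothetical stand-ins, since the body of SwapSurfaces isn’t shown in this post.

```python
class Slab:
    """A pair of buffers: stages read from ping and write into pong."""

    def __init__(self, ping, pong):
        self.ping = ping
        self.pong = pong

    def swap(self):
        # After a stage runs, the freshly written buffer becomes the source.
        self.ping, self.pong = self.pong, self.ping


def run_stage(slab, stage):
    """Apply `stage` to the source buffer, write the result, then swap."""
    slab.pong[:] = stage(slab.ping)  # fill the destination in place
    slab.swap()
```

After `run_stage` returns, `slab.ping` always holds the latest result, which is why every call in the listing above reads from a Ping surface.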

For my full source code, you can download the zip at the end of this article, but I’ll go ahead and give you a peek at the fragment shader for one of the processing stages. Like I said earlier, these shaders are mathematically simple on their own. I bet most of the performance cost is in the texture lookups, not the math. Here’s the shader for the Advect stage:

out vec4 FragColor;

uniform sampler2D VelocityTexture;

uniform sampler2D SourceTexture;

uniform sampler2D Obstacles;

uniform vec2 InverseSize;

uniform float TimeStep;

uniform float Dissipation;

void main()

{

vec2 fragCoord = gl_FragCoord.xy;

float solid = texture(Obstacles, InverseSize * fragCoord).x;

if (solid > 0) {

FragColor = vec4(0);

return;

}

vec2 u = texture(VelocityTexture, InverseSize * fragCoord).xy;

vec2 coord = InverseSize * (fragCoord - TimeStep * u);

FragColor = Dissipation * texture(SourceTexture, coord);

}
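If GLSL isn’t your thing, the same backwards-tracing idea can be sketched on the CPU in Python. This is only an approximation of the shader: cells are addressed by integer index rather than by gl_FragCoord, the `bilinear` helper stands in for the hardware’s linear filtering, and the function names are mine.

```python
import math

def bilinear(field, x, y):
    """Sample field[row][col] at fractional (x, y), clamping to the edges."""
    h, w = len(field), len(field[0])
    x = max(0.0, min(w - 1.0, x))
    y = max(0.0, min(h - 1.0, y))
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = field[y0][x0] * (1 - fx) + field[y0][x1] * fx
    bottom = field[y1][x0] * (1 - fx) + field[y1][x1] * fx
    return top * (1 - fy) + bottom * fy

def advect(velocity, source, solid, dt, dissipation):
    """Semi-Lagrangian advection of a scalar grid.

    velocity[y][x] is a (u, v) tuple in cells per unit time;
    solid[y][x] is truthy inside obstacles.
    """
    h, w = len(source), len(source[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            if solid[y][x]:
                continue  # obstacles carry no quantity
            u, v = velocity[y][x]
            # Step backwards along the velocity to find the incoming value.
            out[y][x] = dissipation * bilinear(source, x - dt * u, y - dt * v)
    return out
```

With a uniform velocity of one cell per step to the right, each value simply moves one cell to the right, scaled by the dissipation factor.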

Downloads

The demo code uses a subset of the Pez ecosystem, which is included in the zip below. It can be built with Visual Studio 2010 or gcc. For the latter, I provided a WAF script instead of a makefile.

I consider this code to be in the public domain. Enjoy!

Path Instancing

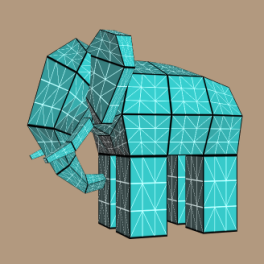

Instancing and skinning are like peas and carrots. But remember, skinning isn’t the only way to deform a mesh on the GPU. In some situations (for example, rendering aquatic life), a bone system can be overkill. If you’ve got a predefined curve in 3-space, it’s easy to write a vertex shader that performs path-based deformation.

Path-based deformation is obviously more limited than true skinning, but gives you the same performance benefits (namely, your mesh lives in an immutable VBO in graphics memory), and it is simpler to set up; no fuss over bone matrices.

Ideally your curve has two sets of data points: a set of node centers and a set of orientation vectors. Your first thought might be to store them in an array of shader uniforms, but don’t forget that modern vertex shaders can make texture lookups. It just so happens that these two sets of data can be nicely represented by a pair of rows in an RGB floating-point texture, like so:

Here’s the beauty part: by creating your texture with a GL_LINEAR filter, you’ll be able to leverage dedicated interpolation hardware to obtain points (and orientation vectors) that live between the node centers. (Component-wise lerping of orientation vectors isn’t quite mathematically kosher, but who’s watching?)

But wait, there’s more! Even the wrap modes of your texture will come in handy. If your 3-space curve is a closed loop, you can use GL_REPEAT for your S coordinate, and voilà — your shader need not worry its little head about cylindrical wrapping!
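To see what the filtering and wrap modes buy you, here’s a small Python emulation of a GL_LINEAR lookup into one row of nodes with GL_REPEAT wrapping. The `sample_row` name is made up for illustration; on the GPU this all happens inside the texture unit.

```python
import math

def sample_row(nodes, s):
    """Mimic a GL_LINEAR + GL_REPEAT lookup at texture coordinate s.

    nodes is a list of equal-length tuples (one texel each).
    """
    n = len(nodes)
    x = s * n - 0.5          # texel centers sit at (i + 0.5) / n
    i0 = math.floor(x)
    f = x - i0               # blend weight toward the next texel
    a = nodes[i0 % n]        # the modulo wraps indices like GL_REPEAT
    b = nodes[(i0 + 1) % n]
    return tuple(pa + f * (pb - pa) for pa, pb in zip(a, b))
```

Sampling exactly at a texel center returns that node unchanged, which is why the vertex shader further down adds a half-texel offset to its texture coordinates.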

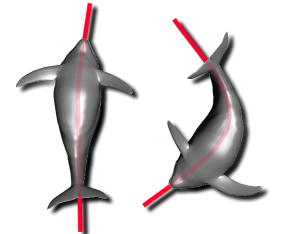

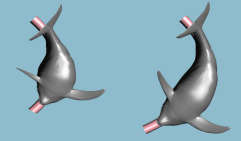

One gotcha with paths is that you’ll want to enforce an even spacing between nodes; this helps keep your shader simple. If your shader assumes a uniform distribution of path nodes but the nodes are actually spaced unevenly, your models can become alarmingly foreshortened. For example, here’s a dolphin that’s tied to an elliptical path, before and after node re-distribution:
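One way to even out the spacing is to resample the path by arc length before uploading it. Here’s a minimal Python sketch for an open polyline; the `resample_even` function is hypothetical and not necessarily how the demo redistributes its nodes.

```python
import math

def resample_even(points, count):
    """Return `count` points evenly spaced by arc length along a polyline.

    points is a list of equal-length tuples; count must be at least 2.
    """
    # Cumulative arc length at each input point.
    cum = [0.0]
    for a, b in zip(points, points[1:]):
        seg_len = math.sqrt(sum((pb - pa) ** 2 for pa, pb in zip(a, b)))
        cum.append(cum[-1] + seg_len)
    total = cum[-1]

    out = []
    seg = 0
    for i in range(count):
        target = total * i / (count - 1)
        # Advance to the segment that contains the target length.
        while seg < len(points) - 2 and cum[seg + 1] < target:
            seg += 1
        span = cum[seg + 1] - cum[seg]
        t = (target - cum[seg]) / span if span > 0 else 0.0
        a, b = points[seg], points[seg + 1]
        out.append(tuple(pa + t * (pb - pa) for pa, pb in zip(a, b)))
    return out
```

For a closed loop you’d append the first point to the end of `points` before resampling, then drop the duplicated last sample.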

Now, without further ado, here’s my vertex shader. Note the complete lack of trig functions; I can’t tell you how many times I’ve seen graphics code that makes costly sine and cosine calls when simple linear algebra will suffice. I’m using old-school GLSL (e.g., attribute instead of in) to make my demo more amenable to Mac OS X.

attribute vec3 Position;

uniform mat4 ModelviewProjection;

uniform sampler2D Sampler;

uniform float TextureHeight;

uniform float InverseWidth;

uniform float InverseHeight;

uniform float PathOffset;

uniform float PathScale;

uniform int InstanceOffset;

void main()

{

float id = gl_InstanceID + InstanceOffset;

float xstep = InverseWidth;

float ystep = InverseHeight;

float xoffset = 0.5 * xstep;

float yoffset = 0.5 * ystep;

// Look up the current and previous centerline positions:

vec2 texCoord;

texCoord.x = PathScale * Position.x + PathOffset + xoffset;

texCoord.y = 2.0 * id / TextureHeight + yoffset;

vec3 currentCenter = texture2D(Sampler, texCoord).rgb;

vec3 previousCenter = texture2D(Sampler, texCoord - vec2(xstep, 0)).rgb;

// Next, compute the path direction vector. Note that this

// can be optimized by removing the normalize, if you know the node spacing.

vec3 pathDirection = normalize(currentCenter - previousCenter);

// Look up the current orientation vector:

texCoord.x = PathOffset + xoffset;

texCoord.y = texCoord.y + ystep;

vec3 pathNormal = texture2D(Sampler, texCoord).rgb;

// Form the change-of-basis matrix:

vec3 a = pathDirection;

vec3 b = pathNormal;

vec3 c = cross(a, b);

mat3 basis = mat3(a, b, c);

// Transform the positions:

vec3 spoke = vec3(0, Position.yz);

vec3 position = currentCenter + basis * spoke;

gl_Position = ModelviewProjection * vec4(position, 1);

}

The shader assumes that the undeformed mesh sits at the origin, with its spine aligned to the X axis. The way it works is this: first, compute the three basis vectors for a new coordinate system defined by the path segment. Next, place the basis vectors into a 3×3 matrix. Finally, apply the 3×3 matrix to the spoke vector, which goes from the mesh’s spine out to the current mesh vertex. Easy!
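Here’s the same change of basis worked through in plain Python for a single vertex, in case it helps to see it outside of GLSL. The `deform` function and its helpers are illustrative names; the two texture lookups are replaced by explicit arguments.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return tuple(x / length for x in v)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def deform(vertex, current_center, previous_center, path_normal):
    """Move one undeformed vertex onto the path, as the vertex shader does."""
    a = normalize(sub(current_center, previous_center))  # path direction
    b = path_normal
    c = cross(a, b)
    spoke = (0.0, vertex[1], vertex[2])
    # mat3(a, b, c) * spoke: GLSL treats a, b, c as the matrix columns.
    return tuple(current_center[i]
                 + a[i] * spoke[0] + b[i] * spoke[1] + c[i] * spoke[2]
                 for i in range(3))
```

For a path heading along +Y with a normal of (-1, 0, 0), the vertex (0.3, 2, 5) at center (0, 3, 0) lands at (-2, 3, 5): its Y offset is carried along the normal and its Z offset along the binormal.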

By the way, don’t feel ashamed if you’ve never made an instanced draw call with OpenGL before. It’s a relatively new feature that was added to the core in OpenGL 3.1. Before that, it was known as GL_ARB_draw_instanced. At the time of this writing, it’s still not supported on Mac OS X. Here’s how you do it with an indexed array:

glDrawElementsInstanced(GL_TRIANGLES, faceCount*3, GL_UNSIGNED_INT, 0, instanceCount);

When making this call, OpenGL automatically sets up the gl_InstanceID variable, which can be accessed from your vertex shader. Simple!

Downloads

The demo code uses a subset of the Pez ecosystem, which is included in the zip below. It uses a cool Python-based build system called WAF. I tested it in a MinGW environment on a Windows 7 machine.

I consider this code to be in the public domain. Here’s a little video of the demo:

Silhouette Extraction

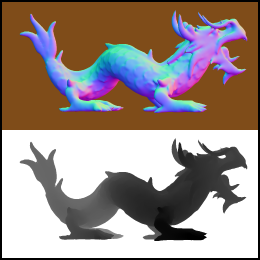

Some of my previous entries used the geometry shader (GS) to highlight certain triangle edges by passing new information to the fragment shader. The GS can also be used to generate long, thin quads along those edges; this lets you apply texturing for sketchy effects. In the case of silhouette lines, you can create an anti-aliased border along the boundary of the model, without the cost of true multisampling.

In this article, I’ll show how to generate silhouettes using GLSL geometry shaders. At the end of the article, I provide the complete demo code for drawing the dragon depicted here. I tested the demo with Ubuntu (gcc), and Windows (Visual Studio 2010).

Old School Silhouettes

Just for fun, I want to point out a classic two-pass method for generating silhouettes, used in the days before shaders. I wouldn’t recommend it nowadays; it does not highlight creases, and “core” OpenGL no longer supports smooth/wide lines anyway. This technique can be found in Under the Shade of the Rendering Tree by Jeff Lander:

- Draw front faces:

  - glPolygonMode(GL_FRONT, GL_FILL)

  - glDepthFunc(GL_LESS)

- Draw back faces:

  - glPolygonMode(GL_BACK, GL_LINE)

  - glDepthFunc(GL_LEQUAL)

Ah, brings back memories…

Computing Adjacency

To detect silhouettes and creases, the GS examines the facet normals of adjacent triangles. So, we’ll need to send down the verts using GL_TRIANGLES_ADJACENCY:

glDrawElements(GL_TRIANGLES_ADJACENCY, // primitive type

triangleCount*6, // index count

GL_UNSIGNED_SHORT, // index type

0); // start index

Six verts per triangle seems like an egregious redundancy of data, but keep in mind that it enlarges your index VBO, not your attributes VBO.

Typical mesh files don’t include adjacency information, but it’s easy enough to compute in your application. A wonderfully simple data structure for algorithms like this is the Half-Edge Table.
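To illustrate the six-index layout before diving into half-edges, here’s a quick Python sketch that builds a GL_TRIANGLES_ADJACENCY index list using a dictionary keyed on directed edges, which plays the role of the twin lookup. It assumes consistently wound triangles. The boundary fallback (reusing the triangle’s own opposite vertex) is an arbitrary placeholder of mine, not something from the demo.

```python
def build_adjacency_indices(triangles):
    """Expand (a, b, c) triangles into 6 indices each.

    Even slots hold the triangle's own vertices; each odd slot holds the
    vertex across the edge formed by the even slots on either side of it.
    """
    # Map each directed edge to the vertex opposite it in its own triangle.
    opposite = {}
    for a, b, c in triangles:
        opposite[(a, b)] = c
        opposite[(b, c)] = a
        opposite[(c, a)] = b

    indices = []
    for a, b, c in triangles:
        for start, end, own in ((a, b, c), (b, c, a), (c, a, b)):
            indices.append(start)
            # The neighbor across this edge owns the reversed edge (end, start).
            # On an open boundary there is no neighbor, so fall back to `own`.
            indices.append(opposite.get((end, start), own))
    return indices
```

For two triangles sharing an edge, `(0, 1, 2)` and `(2, 1, 3)`, the first triangle’s slot for edge 1→2 receives vertex 3, and the second triangle’s slot for edge 2→1 receives vertex 0.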

For low-level mesh algorithms, I enjoy using C99 rather than C++. I find that avoiding STL makes life a bit easier when debugging, and it encourages me to use memory efficiently. Here’s a half-edge structure in C:

typedef struct HalfEdgeRec

{

unsigned short Vert; // Vertex index at the end of this half-edge

struct HalfEdgeRec* Twin; // Oppositely oriented adjacent half-edge

struct HalfEdgeRec* Next; // Next half-edge around the face

} HalfEdge;