Archive for the ‘C++’ Category

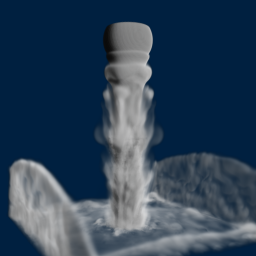

3D Eulerian Grid

I finally extended my OpenGL-based Eulerian fluid simulation to 3D. It’s not as pretty as Miles Macklin’s stuff (here), but at least it runs at interactive rates on my GeForce GTS 450, which isn’t exactly top-of-the-line. My original blog entry, “Simple Fluid Simulation”, goes into much more detail than this post.

I thought it would be fun to invert buoyancy and gravity to make it look more like liquid nitrogen than smoke. The source code can be downloaded at the end of this post.

Extending to three dimensions was fairly straightforward. I’m still using a half-float format for my velocity texture, but I’ve obviously made it 3D and changed its format to GL_RGB16F (previously it was GL_RG16F).

The volumetric image processing is achieved by ping-ponging layered FBOs, using instanced rendering to update all slices of a volume with a single draw call and a tiny 4-vert VBO, like this:

glDrawArraysInstanced(GL_TRIANGLE_STRIP, 0, 4, numLayers);

Here are the vertex and geometry shaders that I use to make this work:

-- Vertex Shader

in vec4 Position;

out int vInstance;

void main()

{

gl_Position = Position;

vInstance = gl_InstanceID;

}

-- Geometry Shader

layout(triangles) in;

layout(triangle_strip, max_vertices = 3) out;

in int vInstance[3];

out float gLayer;

uniform float InverseSize;

void main()

{

gl_Layer = vInstance[0];

gLayer = float(gl_Layer) + 0.5;

gl_Position = gl_in[0].gl_Position;

EmitVertex();

gl_Position = gl_in[1].gl_Position;

EmitVertex();

gl_Position = gl_in[2].gl_Position;

EmitVertex();

EndPrimitive();

}

Note that I offset the layer by 0.5. I’m too lazy to pass the entire texture coordinate to the fragment shader, so my fragment shader uses a gl_FragCoord trick to get to the right texture coordinate:

-- Fragment Shader

uniform vec3 InverseSize;

uniform sampler3D VelocityTexture;

void main()

{

vec3 tc = InverseSize * vec3(gl_FragCoord.xy, gLayer);

vec3 velocity = texture(VelocityTexture, tc).xyz;

// ...

}

Since the fragment shader gets evaluated at pixel centers, I needed the 0.5 offset on the layer coordinate to prevent the fluid from “drifting” along the Z axis.

The fragment shaders are otherwise pretty damn similar to their 2D counterparts. As an example, I’ll show you how I extended the SubtractGradient shader, which computes the gradient vector for pressure. Previously it did something like this:

float N = texelFetchOffset(Pressure, T, 0, ivec2(0, 1)).r; float S = texelFetchOffset(Pressure, T, 0, ivec2(0, -1)).r; float E = texelFetchOffset(Pressure, T, 0, ivec2(1, 0)).r; float W = texelFetchOffset(Pressure, T, 0, ivec2(-1, 0)).r; vec2 oldVelocity = texelFetch(Velocity, T, 0).xy; vec2 gradient = vec2(E - W, N - S) * GradientScale; vec2 newVelocity = oldVelocity - gradient;

The new code does something like this:

float N = texelFetchOffset(Pressure, T, 0, ivec3(0, 1, 0)).r; float S = texelFetchOffset(Pressure, T, 0, ivec3(0, -1, 0)).r; float E = texelFetchOffset(Pressure, T, 0, ivec3(1, 0, 0)).r; float W = texelFetchOffset(Pressure, T, 0, ivec3(-1, 0, 0)).r; float U = texelFetchOffset(Pressure, T, 0, ivec3(0, 0, 1)).r; float D = texelFetchOffset(Pressure, T, 0, ivec3(0, 0, -1)).r; vec3 oldVelocity = texelFetch(Velocity, T, 0).xyz; vec3 gradient = vec3(E - W, N - S, U - D) * GradientScale; vec3 newVelocity = oldVelocity - gradient;

Simple!

Downloads

I’ve tested the code with Visual Studio 2010. It uses CMake for the build system. If you have trouble building it or running it, let me warn you that you need a decent graphics card and decent OpenGL drivers.

I consider this code to be on the public domain. Enjoy!

Tron, Volumetric Lines, and Meshless Tubes

With Tron: Legacy hitting theaters, I thought it’d be fun to write a post on volumetric line strips. They come in handy for a variety of effects (lightsabers, lightning, particle traces, etc.). In many cases, you can render volumetric lines by drawing thin lines into an offscreen surface, then blurring the entire surface. However, in some cases, screen-space blur won’t work out for you. Maybe you’re fill-rate bound, or maybe you need immediate depth-testing. You’d prefer a single-pass solution, and you want your volumetric lines to look great even when the lines are aligned to the viewing direction.

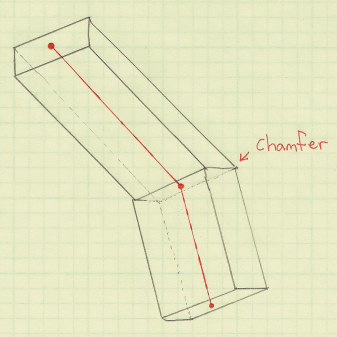

Geometry shaders to the rescue! By having the geometry shader emit a cuboid for each line segment, the fragment shader can perform a variety of effects within the screen-space region defined by the projected cuboid. This includes Gaussian splatting, alpha texturing, or even simple raytracing of cylinders or capsules. I stole the general idea from Sébastien Hillaire.

You can apply this technique to line strip primitives with or without adjacency. If you include adjacency, your geometry shader can chamfer the ends of the cuboid, turning the cuboid into a general prismoid and creating a tighter screen-space region.

I hate bloating vertex buffers with adjacency information. However, with GL_LINE_STRIP_ADJACENCY, adjacency incurs very little cost: simply add an extra vert to the beginning and end of your vertex buffer and you’re done! For more on adjacency, see my post on silhouettes or this interesting post on avoiding adjacency for terrain silhouettes.

For line strips, you might want to use GL_MAX blending rather than simple additive blending. This makes it easy to avoid extra glow at the joints.

Here’s a simplified version of the geometry shader, using old-school GLSL syntax to make Apple fans happy:

uniform mat4 ModelviewProjection;

varying in vec3 vPosition[4]; // Four inputs since we're using GL_LINE_STRIP_ADJACENCY

varying in vec3 vNormal[4]; // Orientation vectors are necessary for consistent alignment

vec4 prismoid[8]; // Scratch space for the eight corners of the prismoid

void emit(int a, int b, int c, int d)

{

gl_Position = prismoid[a]; EmitVertex();

gl_Position = prismoid[b]; EmitVertex();

gl_Position = prismoid[c]; EmitVertex();

gl_Position = prismoid[d]; EmitVertex();

EndPrimitive();

}

void main()

{

// Compute orientation vectors for the two connecting faces:

vec3 p0, p1, p2, p3;

p0 = vPosition[0]; p1 = vPosition[1];

p2 = vPosition[2]; p3 = vPosition[3];

vec3 n0 = normalize(p1-p0);

vec3 n1 = normalize(p2-p1);

vec3 n2 = normalize(p3-p2);

vec3 u = normalize(n0+n1);

vec3 v = normalize(n1+n2);

// Declare scratch variables for basis vectors:

vec3 i,j,k; float r = Radius;

// Compute face 1 of 2:

j = u; i = vNormal[1]; k = cross(i, j); i *= r; k *= r;

prismoid[0] = ModelviewProjection * vec4(p1 + i + k, 1);

prismoid[1] = ModelviewProjection * vec4(p1 + i - k, 1);

prismoid[2] = ModelviewProjection * vec4(p1 - i - k, 1);

prismoid[3] = ModelviewProjection * vec4(p1 - i + k, 1);

// Compute face 2 of 2:

j = v; i = vNormal[2]; k = cross(i, j); i *= r; k *= r;

prismoid[4] = ModelviewProjection * vec4(p2 + i + k, 1);

prismoid[5] = ModelviewProjection * vec4(p2 + i - k, 1);

prismoid[6] = ModelviewProjection * vec4(p2 - i - k, 1);

prismoid[7] = ModelviewProjection * vec4(p2 - i + k, 1);

// Emit the six faces of the prismoid:

emit(0,1,3,2); emit(5,4,6,7);

emit(4,5,0,1); emit(3,2,7,6);

emit(0,3,4,7); emit(2,1,6,5);

}

Glow With Point-Line Distance

Here’s my fragment shader for Tron-like glow. For this to link properly, the geometry shader needs to output some screen-space coordinates for the nodes in the line strip (gEndpoints[2] and gPosition). The fragment shader simply computes a point-line distance, using the result for the pixel’s intensity. It’s actually computing distance as “point-to-segment” rather than “point-to-infinite-line”. If you prefer the latter, you might be able to make an optimization by moving the distance computation up into the geometry shader.

uniform vec4 Color;

varying vec2 gEndpoints[2];

varying vec2 gPosition;

uniform float Radius;

uniform mat4 Projection;

// Return distance from point 'p' to line segment 'a b':

float line_distance(vec2 p, vec2 a, vec2 b)

{

float dist = distance(a,b);

vec2 v = normalize(b-a);

float t = dot(v,p-a);

vec2 spinePoint;

if (t > dist) spinePoint = b;

else if (t > 0.0) spinePoint = a + t*v;

else spinePoint = a;

return distance(p,spinePoint);

}

void main()

{

float d = line_distance(gPosition, gEndpoints[0], gEndpoints[1]);

gl_FragColor = vec4(vec3(1.0 - 12.0 * d), 1.0);

}

Raytraced Cylindrical Imposters

I’m not sure how useful this is, but you can actually go a step further and perform a ray-cylinder intersection test in your fragment shader and use the surface normal to perform lighting. The result: triangle-free tubes! By writing to the gl_FragDepth variable, you can enable depth-testing, and your tubes integrate into the scene like magic; no tessellation required. Here’s an excerpt from my fragment shader. (For the full shader, download the source code at the end of the article.)

vec3 perp(vec3 v)

{

vec3 b = cross(v, vec3(0, 0, 1));

if (dot(b, b) < 0.01)

b = cross(v, vec3(0, 1, 0));

return b;

}

bool IntersectCylinder(vec3 origin, vec3 dir, out float t)

{

vec3 A = gEndpoints[1]; vec3 B = gEndpoints[2];

float Epsilon = 0.0000001;

float extent = distance(A, B);

vec3 W = (B - A) / extent;

vec3 U = perp(W);

vec3 V = cross(U, W);

U = normalize(cross(V, W));

V = normalize(V);

float rSqr = Radius*Radius;

vec3 diff = origin - 0.5 * (A + B);

mat3 basis = mat3(U, V, W);

vec3 P = diff * basis;

float dz = dot(W, dir);

if (abs(dz) >= 1.0 - Epsilon) {

float radialSqrDist = rSqr - P.x*P.x - P.y*P.y;

if (radialSqrDist < 0.0)

return false;

t = (dz > 0.0 ? -P.z : P.z) + extent * 0.5;

return true;

}

vec3 D = vec3(dot(U, dir), dot(V, dir), dz);

float a0 = P.x*P.x + P.y*P.y - rSqr;

float a1 = P.x*D.x + P.y*D.y;

float a2 = D.x*D.x + D.y*D.y;

float discr = a1*a1 - a0*a2;

if (discr < 0.0)

return false;

if (discr > Epsilon) {

float root = sqrt(discr);

float inv = 1.0/a2;

t = (-a1 + root)*inv;

return true;

}

t = -a1/a2;

return true;

}

Motion-Blurred Billboards

Emitting cuboids from the geometry shader are great fun, but they’re overkill for many tasks. If you want to render short particle traces, it’s easier just to emit a quad from your geometry shader. The quad can be oriented and stretched according to the screen-space projection of the particle’s velocity vector. The tricky part is handling degenerate conditions: velocity might be close to zero, or velocity might be Z-aligned.

One technique to deal with this is to interpolate the emitted quad between a vertically-aligned square and a velocity-aligned rectangle, and basing the lerping factor on the magnitude of the velocity vector projected into screen-space.

Here’s the full geometry shader and fragment shader for motion-blurred particles. The geometry shader receives two endpoints of a line segment as input, and uses these to determine velocity.

-- Geometry Shader

varying in vec3 vPosition[2];

varying out vec2 gCoord;

uniform mat4 ModelviewProjection;

uniform float Radius;

uniform mat3 Modelview;

uniform mat4 Projection;

uniform float Time;

float Epsilon = 0.001;

void main()

{

vec3 p = mix(vPosition[0], vPosition[1], Time);

float w = Radius * 0.5;

float h = w * 2.0;

vec3 u = Modelview * (vPosition[1] - vPosition[0]);

// Determine 't', which represents Z-aligned magnitude.

// By default, t = 0.0.

// If velocity aligns with Z, increase t towards 1.0.

// If total speed is negligible, increase t towards 1.0.

float t = 0.0;

float nz = abs(normalize(u).z);

if (nz > 1.0 - Epsilon)

t = (nz - (1.0 - Epsilon)) / Epsilon;

else if (dot(u,u) < Epsilon)

t = (Epsilon - dot(u,u)) / Epsilon;

// Compute screen-space velocity:

u.z = 0.0;

u = normalize(u);

// Lerp the orientation vector if screen-space velocity is negligible:

u = normalize(mix(u, vec3(1,0,0), t));

h = mix(h, w, t);

// Compute the change-of-basis matrix for the billboard:

vec3 v = vec3(-u.y, u.x, 0);

vec3 a = u * Modelview;

vec3 b = v * Modelview;

vec3 c = cross(a, b);

mat3 basis = mat3(a, b, c);

// Compute the four offset vectors:

vec3 N = basis * vec3(0,w,0);

vec3 S = basis * vec3(0,-w,0);

vec3 E = basis * vec3(-h,0,0);

vec3 W = basis * vec3(h,0,0);

// Emit the quad:

gCoord = vec2(1,1); gl_Position = ModelviewProjection * vec4(p+N+E,1); EmitVertex();

gCoord = vec2(-1,1); gl_Position = ModelviewProjection * vec4(p+N+W,1); EmitVertex();

gCoord = vec2(1,-1); gl_Position = ModelviewProjection * vec4(p+S+E,1); EmitVertex();

gCoord = vec2(-1,-1); gl_Position = ModelviewProjection * vec4(p+S+W,1); EmitVertex();

EndPrimitive();

}

-- Fragment Shader

varying vec2 gCoord;

void main()

{

float r2 = dot(gCoord, gCoord);

float d = exp(r2 * -1.2); // Gaussian Splat

gl_FragColor = vec4(vec3(d), 1.0);

}

Diversion: Hilbert Cubed Sphere

You might’ve noticed the interesting path that I’ve used for my examples. This is a Hilbert curve drawn on the surface of a cube, with the cube deformed into a sphere. If you think of a Hilbert curve as a parametric function from R1 to R2, then any two points that are reasonably close in R1 are also reasonably close in R2. Cool huh? Here’s some C++ code for how I constructed the vertex buffer for the Hilbert cube:

struct Turtle {

void Move(float dx, float dy, bool changeFace = false) {

if (changeFace) ++Face;

switch (Face) {

case 0: P += Vector3(dx, dy, 0); break;

case 1: P += Vector3(0, dy, dx); break;

case 2: P += Vector3(-dy, 0, dx); break;

case 3: P += Vector3(0, -dy, dx); break;

case 4: P += Vector3(dy, 0, dx); break;

case 5: P += Vector3(dy, dx, 0); break;

}

HilbertPath.push_back(P);

}

Point3 P;

int Face;

};

static void HilbertU(int level)

{

if (level == 0) return;

HilbertD(level-1); HilbertTurtle.Move(0, -dist);

HilbertU(level-1); HilbertTurtle.Move(dist, 0);

HilbertU(level-1); HilbertTurtle.Move(0, dist);

HilbertC(level-1);

}

static void HilbertD(int level)

{

if (level == 0) return;

HilbertU(level-1); HilbertTurtle.Move(dist, 0);

HilbertD(level-1); HilbertTurtle.Move(0, -dist);

HilbertD(level-1); HilbertTurtle.Move(-dist, 0);

HilbertA(level-1);

}

static void HilbertC(int level)

{

if (level == 0) return;

HilbertA(level-1); HilbertTurtle.Move(-dist, 0);

HilbertC(level-1); HilbertTurtle.Move(0, dist);

HilbertC(level-1); HilbertTurtle.Move(dist, 0);

HilbertU(level-1);

}

static void HilbertA(int level)

{

if (level == 0) return;

HilbertC(level-1); HilbertTurtle.Move(0, dist);

HilbertA(level-1); HilbertTurtle.Move(-dist, 0);

HilbertA(level-1); HilbertTurtle.Move(0, -dist);

HilbertD(level-1);

}

void CreateHilbertCube(int lod)

{

HilbertU(lod); HilbertTurtle.Move(dist, 0, true);

HilbertU(lod); HilbertTurtle.Move(0, dist, true);

HilbertC(lod); HilbertTurtle.Move(0, dist, true);

HilbertC(lod); HilbertTurtle.Move(0, dist, true);

HilbertC(lod); HilbertTurtle.Move(dist, 0, true);

HilbertD(lod);

}

Deforming the cube into a sphere is easy: just normalize the positions!

Downloads

I tested the code on Mac OS X and on Windows. It uses CMake for the build system, and I consider the code to be on the public domain. A video of the app is embedded at the bottom of this page. Enjoy!

Silhouette Extraction

Some of my previous entries used the geometry shader (GS) to highlight certain triangle edges by passing new information to the fragment shader. The GS can also be used generate long, thin quads along those edges; this lets you apply texturing for sketchy effects. In the case of silhouette lines, you can create an anti-aliased border along the boundary of the model, without the cost of true multisampling.

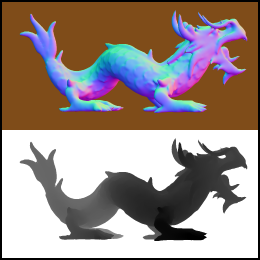

In this article, I’ll show how to generate silhouettes using GLSL geometry shaders. At the end of the article, I provide the complete demo code for drawing the dragon depicted here. I tested the demo with Ubuntu (gcc), and Windows (Visual Studio 2010).

Old School Silhouettes

Just for fun, I want to point out a classic two-pass method for generating silhouettes, used in the days before shaders. I wouldn’t recommend it nowadays; it does not highlight creases and “core” OpenGL no longer supports smooth/wide lines anyway. This technique can be found in Under the Shade of the Rendering Tree by John Lander:

- Draw front faces:

- glPolygonMode(GL_FRONT, GL_FILL)

- glDepthFunc(GL_LESS)

- Draw back faces:

- glPolygonMode(GL_BACK, GL_LINE)

- glDepthFunc(GL_LEQUAL)

Ah, brings back memories…

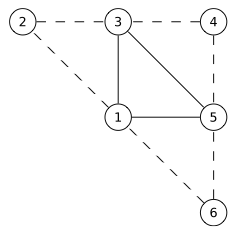

Computing Adjacency

To detect silhouettes and creases, the GS examines the facet normals of adjacent triangles. So, we’ll need to send down the verts using GL_TRIANGLES_ADJACENCY:

glDrawElements(GL_TRIANGLES_ADJACENCY, // primitive type

triangleCount*6, // index count

GL_UNSIGNED_SHORT, // index type

0); // start index

Six verts per triangle seems like an egregious redundancy of data, but keep in mind that it enlarges your index VBO, not your attributes VBO.

Typical mesh files don’t include adjacency information, but it’s easy enough to compute in your application. A wonderfully simple data structure for algorithms like this is the Half-Edge Table.

For low-level mesh algorithms, I enjoy using C99 rather than C++. I find that avoiding STL makes life a bit easier when debugging, and it encourages me to use memory efficiently. Here’s a half-edge structure in C:

typedef struct HalfEdgeRec

{

unsigned short Vert; // Vertex index at the end of this half-edge

struct HalfEdgeRec* Twin; // Oppositely oriented adjacent half-edge

struct HalfEdgeRec* Next; // Next half-edge around the face

} HalfEdge;

If you’ve got a half-edge structure for your mesh, it’s a simple linear-time algorithm to expand an index buffer to include adjacency information. Check out the downloads section to see how I did it.

By the way, you do need an associative array to build a half-edge table when loading model data from a traditional mesh file. In C++, this is all too easy because of helpful libraries like std::hash_map and Google’s Sparse Hash. For C99, I find that Judy arrays provide a compelling way of creating associative arrays.

Basic Fins

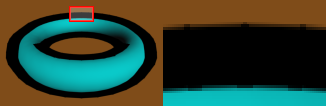

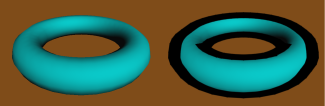

Now that we’ve got preliminaries out of the way, let’s start on the silhouette extrusion algorithm. We’ll start simple and make enhancements in the coming sections. We’ll use this torus as the demo model. The image on the left shows the starting point; the image on the right is our goal.

Silhouette lines occur on the boundary between front-facing triangles and back-facing triangles. Let’s define a function that takes three triangle corners (in screen space), returning true for front-facing triangles. To pull this off, we can take the cross product of two sides. If the Z component of the result is positive, then it’s front-facing. Since we can ignore the X and Y components of the result, this reduces to:

bool IsFront(vec2 A, vec2 B, vec2 C)

{

return 0 < (A.x * B.y - B.x * A.y) + (B.x * C.y - C.x * B.y) + (C.x * A.y - A.x * C.y);

}

Incidentally, the length of the cross-product is equal to twice the area of the triangle. But I digress…

The next function emits a long, thin quadrilateral between two points. To do this, we’ll add an extrusion vector to the two endpoints. In the following snippet, N is the extrusion vector, computed by taking the perpendicular of the normalized vector between the two points, then scaling it by half the desired line width:

uniform float HalfWidth;

void EmitEdge(vec2 P0, vec2 P1)

{

vec2 V = normalize(P1 - P0);

vec2 N = vec2(-V.y, V.x) * HalfWidth;

gl_Position = vec4(P0 - N, 0, 1); EmitVertex();

gl_Position = vec4(P0 + N, 0, 1); EmitVertex();

gl_Position = vec4(P1 - N, 0, 1); EmitVertex();

gl_Position = vec4(P1 + N, 0, 1); EmitVertex();

EndPrimitive();

}

Next let’s write main() for the geometry shader. It checks if the current triangle is frontfacing, then emits a quad for each backfacing neighbor:

layout(triangles_adjacency) in;

layout(triangle_strip, max_vertices = 12) out;

void main()

{

vec2 v0 = gl_in[0].gl_Position.xy;

vec2 v1 = gl_in[1].gl_Position.xy;

vec2 v2 = gl_in[2].gl_Position.xy;

vec2 v3 = gl_in[3].gl_Position.xy;

vec2 v4 = gl_in[4].gl_Position.xy;

vec2 v5 = gl_in[5].gl_Position.xy;

if (IsFront(v0, v2, v4)) {

if (!IsFront(v0, v1, v2)) EmitEdge(v0, v2);

if (!IsFront(v2, v3, v4)) EmitEdge(v2, v4);

if (!IsFront(v0, v4, v5)) EmitEdge(v4, v0);

}

}

Not too pretty. There are three problems with this silhouette:

- The outer edge of the fin needs some antialiasing.

- We’re only seeing the upper half of the fin.

- There’s a gap between neighboring fins.

We’ll address each of these issues in the coming sections.

Antialiased Fins

First let’s add some antialiasing to those fins. To pull this off, we’ll attach a distance-from-center value to each corner of the quad. In the fragment shader, we can use these values to see how far the current pixel is from the edge. If it’s less than a couple pixels away, it fades the alpha value. The EmitEdge function now becomes:

out float gDist;

uniform float HalfWidth;

void EmitEdge(vec2 P0, vec2 P1)

{

vec2 V = normalize(P1 - P0);

vec2 N = vec2(-V.y, V.x) * HalfWidth;

gDist = +HalfWidth;

gl_Position = vec4(P0 - N, 0, 1); EmitVertex();

gDist = -HalfWidth;

gl_Position = vec4(P0 + N, 0, 1); EmitVertex();

gDist = +HalfWidth;

gl_Position = vec4(P1 - N, 0, 1); EmitVertex();

gDist = -HalfWidth;

gl_Position = vec4(P1 + N, 0, 1); EmitVertex();

EndPrimitive();

}

Next is the fragment shader, which uses the tipLength variable to represent the length of the desired alpha gradient. We leverage GLSL’s built-in fwidth function to prevent it from looking fuzzy when zoomed in:

in float gDist;

out vec4 fColor;

uniform float HalfWidth;

const vec3 LineColor = vec3(0, 0, 0);

void main()

{

float alpha = 1.0;

float d = abs(gDist);

float tipLength = 2.0 * fwidth(d);

if (d > HalfWidth - tipLength)

alpha = 1.0 - (d - HalfWidth + tipLength) / tipLength;

fColor = vec4(LineColor, alpha);

}

The Spine Test

We extruded in both directions, so why are we seeing only half of the fin? The issue lies with depth testing. We could simply disable all depth testing during the silhouette pass, but then we’d see creases from the opposite side of the model. The correct trick is to perform depth testing along the centerline of the quad, rather than at the current fragment. This method is called spine testing, and it was introduced by a recent paper from Forrester Cole and Adam Finkelstein.

In order to perform custom depth testing, we’ll need to do an early Z pass. An early Z pass is often useful for other reasons, especially for scenes with high depth complexity. In our demo program, we generate a G-Buffer containing normals and depths:

The normals are used for per-pixel lighting while the depths are used for spine testing. The fragment shader for G-Buffer generation is exceedingly simple; it simply transforms the normals into the [0,1] range and writes them out:

in vec3 vNormal;

out vec3 fColor;

void main()

{

fColor = (vNormal + 1.0) * 0.5;

}

When rendering silhouette lines, the fragment shader will need texture coordinates for the spine. The geometry shader comes to the rescue again. It simply transforms the device coordinates of the quad’s endpoints into texture coordinates, then writes them out to a new output variable:

out float gDist;

out vec2 gSpine;

uniform float HalfWidth;

void EmitEdge(vec2 P0, vec2 P1)

{

vec2 V = normalize(P1 - P0);

vec2 N = vec2(-V.y, V.x) * HalfWidth;

gSpine = (P0 + 1.0) * 0.5;

gDist = +HalfWidth;

gl_Position = vec4(P0 - N, 0, 1); EmitVertex();

gDist = -HalfWidth;

gl_Position = vec4(P0 + N, 0, 1); EmitVertex();

gSpine = (P1 + 1.0) * 0.5;

gDist = +HalfWidth;

gl_Position = vec4(P1 - N, 0, 1); EmitVertex();

gDist = -HalfWidth;

gl_Position = vec4(P1 + N, 0, 1); EmitVertex();

EndPrimitive();

}

Next we enhance the fragment shader to issue a discard for fragments that fail the custom depth test:

in float gDist;

in vec2 gSpine;

out vec4 fColor;

uniform float HalfWidth;

uniform sampler2D DepthSampler;

uniform vec2 Size;

void main()

{

vec2 texCoord = gSpine;

float depth = texture2D(DepthSampler, texCoord).r;

if (depth < gl_FragCoord.z)

discard;

float alpha = 1.0;

float d = abs(gDist);

float tipLength = 2.0 * fwidth(d);

if (d > HalfWidth - tipLength)

alpha = 1.0 - (d - HalfWidth + tipLength) / tipLength;

fColor = vec4(0, 0, 0, alpha);

}

Here’s the result. Note that the fin now extends below the contour, allowing you to see the AA on both ends.

Mind the Gap!

Next let’s fill in those gaps, creating the illusion of a single, seamless line. Some researchers create new triangles on either end of the fin, using vertex normals to determine the shape of those triangles. In practice I found this tricky to work with, especially since vertex normals are usually intended for 3D lighting, not screen-space effects. I found it easier to simply extend the lengths of the quads that I’m already generating. Sure, this causes too much overlap in some places, but it doesn’t seem to hurt the final image quality much. The percentage by which to extend the fin length is controlled via the OverhangLength shader uniform in the following snippet:

out float gDist;

out vec2 gSpine;

uniform float HalfWidth;

uniform float OverhangLength;

void EmitEdge(vec2 P0, vec2 P1)

{

vec2 E = OverhangLength * (P1 - P0);

vec2 V = normalize(E);

vec2 N = vec2(-V.y, V.x) * HalfWidth;

gSpine = (P0 + 1.0) * 0.5;

gDist = +HalfWidth;

gl_Position = vec4(P0 - N - E, 0, 1); EmitVertex();

gDist = -HalfWidth;

gl_Position = vec4(P0 + N - E, 0, 1); EmitVertex();

gSpine = (P1 + 1.0) * 0.5;

gDist = +HalfWidth;

gl_Position = vec4(P1 - N + E, 0, 1); EmitVertex();

gDist = -HalfWidth;

gl_Position = vec4(P1 + N + E, 0, 1); EmitVertex();

EndPrimitive();

}

Further Reading

These are some of the resources that gave me ideas for this blog post:

- There’s a nice article at Gamasutra about silhouette rendering with DirectX 10, written by an old colleague of mine, Josh Doss.

- Achieving AA by drawing lines on top of the model is called edge overdraw, which was written about in this 2001 paper by Hughes Hoppe and others.

- My silhouette lines are centered at the boundary, rather than extending only in the outwards direction. To achieve this, I use the “spine test”, which was introduced in a nice paper entitled Two Fast Methods for High-Quality Line Visibility, by Forrester Cole and Adam Finkelstein.

- To learn how to generate texture coordinates for fins (e.g., sketchy lines), and a different way of dealing with the gap between fins, check out a paper entitled Single Pass GPU Stylized Edges, by Hermosilla and Vázquez.

Downloads

The demo code uses a subset of the Pez ecosystem, which is included in the zip below.

I consider this code to be on the public domain.